Continuous Distributions


Today we’ll talk about continuous distributions: probability distributions of continuous random variables.

We will start by reviewing some concepts from integral calculus. Then we will apply these concepts to answer various probability questions and to compute the expectation and variance of continuous random variables. In the final part of the lecture we will focus on common continuous distributions: the continuous uniform, Gaussian (normal), and exponential distributions.

Review of Integrals

Area Under the Curve

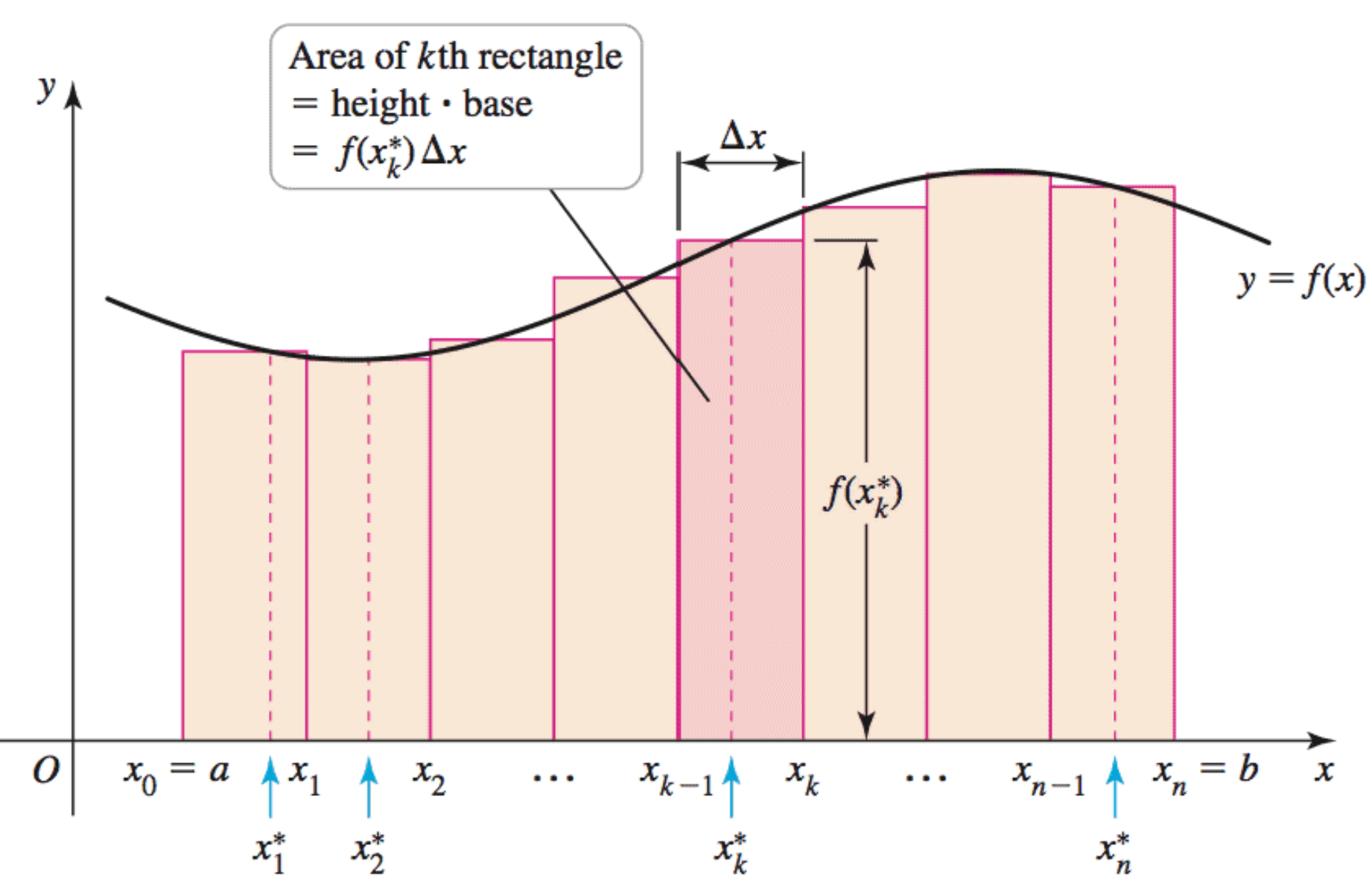

Consider a function \(f\) defined for \(a\leq x \leq b\). We divide the interval \([a,b]\) into \(n\) subintervals of equal width, \(\Delta x = \frac{b-a}{n}.\) In addition, we let \(x_0 = a, x_1, x_2, \dots, x_n = b\) be the endpoints of these intervals. Finally, we let \(x_1^{\ast}, x_2^{\ast}, \dots, x_n^{\ast}\) be any sample points within these intervals, so \(x_i^{\ast}\) lies in the \(i\)th subinterval \([x_{i-1}, x_i]\).

Then \(\int_a^b f(x) dx\) is defined as the limit of the sum \(\sum_{k=1}^n f(x_k^{\ast}) \Delta x\) as \(n\) approaches infinity.

Mathematically, we write this as

\[\int_a^b f(x)\,dx = \lim_{n \to \infty} \sum_{k=1}^n f(x_k^{\ast}) \Delta x.\]

The sum \(\sum_{k=1}^n f(x_k^{\ast}) \Delta x\) is called a Riemann sum after the German mathematician Bernhard Riemann.
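A Riemann sum is easy to compute directly, which makes the limit definition concrete. The sketch below is a minimal illustration in Python; the function name `riemann_sum` and the `rule` argument (which picks the sample point \(x_k^{\ast}\) as the left endpoint, right endpoint, or midpoint of each subinterval) are our own choices, not part of the lecture.

```python
def riemann_sum(f, a, b, n, rule="right"):
    """Approximate the integral of f over [a, b] using n subintervals of width (b - a) / n.

    rule chooses the sample point x_k* inside [x_{k-1}, x_k]:
    "left" endpoint, "mid"point, or "right" endpoint.
    """
    dx = (b - a) / n
    offset = {"left": 0.0, "mid": 0.5, "right": 1.0}[rule]
    total = 0.0
    for k in range(1, n + 1):
        x_star = a + (k - 1 + offset) * dx  # sample point in the k-th subinterval
        total += f(x_star) * dx             # area of the k-th approximating rectangle
    return total

# As n grows, the sums approach the area 1/3 under y = x^2 on [0, 1].
for n in (10, 100, 1000):
    print(n, riemann_sum(lambda x: x**2, 0.0, 1.0, n))
```

Any choice of sample points gives the same limit for an integrable \(f\); the midpoint rule simply tends to converge faster.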

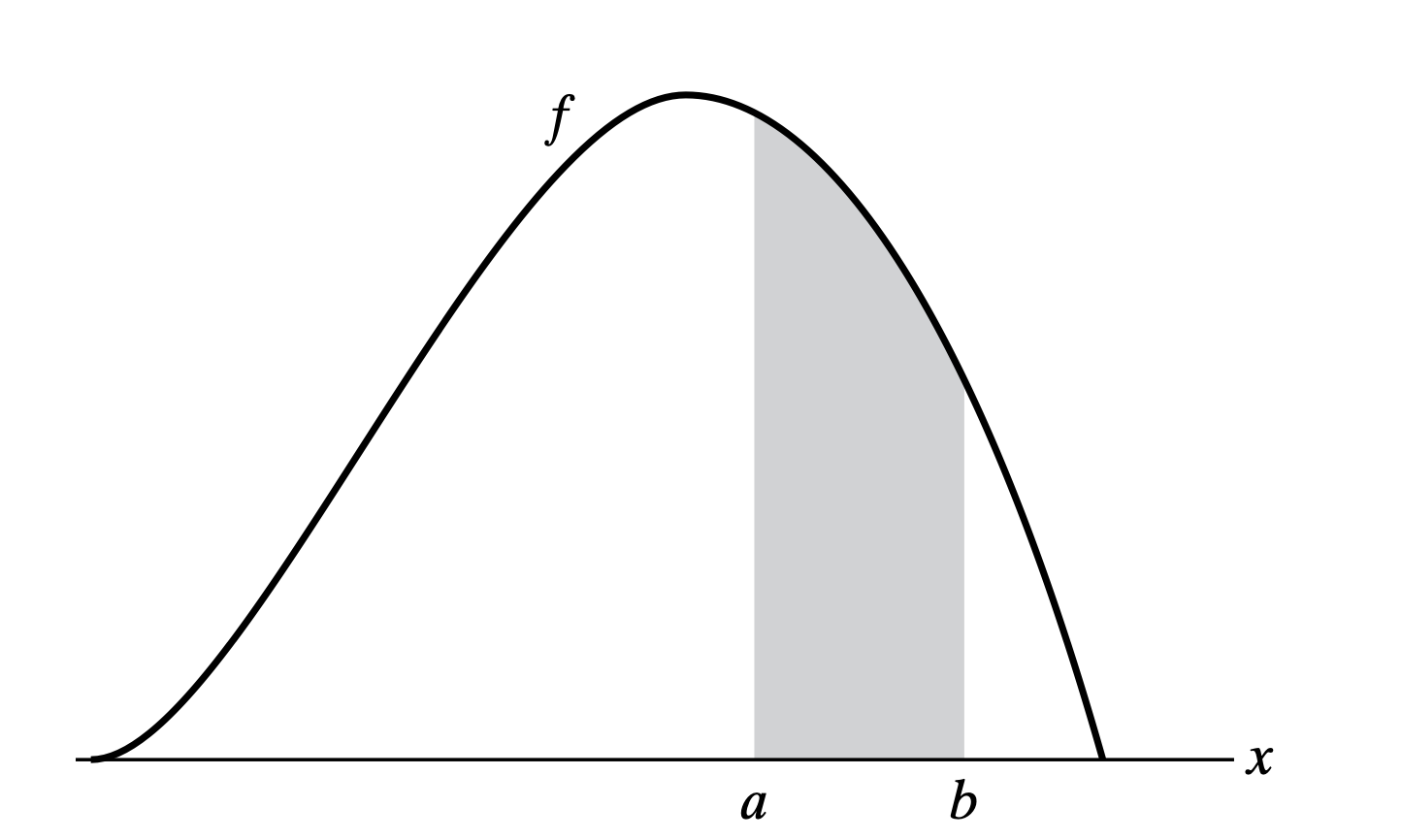

If the function \(f\) is positive (assumes only positive values) then the Riemann sum can be interpreted as a sum of areas of approximating rectangles. Therefore, for a positive \(f\) the integral \(\int_a^b f(x) dx\) is the area under the curve \(y=f(x)\) from \(a\) to \(b\).

The symbol \(\int\) was introduced by Leibniz and is called an integral sign. It is an elongated \(S\) and was chosen because an integral is a limit of sums.

In the notation \(\int_a^bf(x) dx\), \(f(x)\) is the integrand and \(a\) and \(b\) are called the limits of integration: \(a\) is the lower limit and \(b\) is the upper limit.

The symbol \(dx\) has no official meaning by itself.

A definite integral is the integral that represents the area under a curve between two fixed limits of integration.

We say that a function \(f\) defined on \([a,b]\) is integrable on \([a,b]\) if \(\lim_{n \to \infty} \sum_{k=1}^n f(x_k^{\ast}) \Delta x\) exists.

Properties of the Integral

Here are some very important properties of definite integrals.

Let \(f\) and \(g\) be integrable functions on \([a,b]\) and let \(c\) be any constant.

\(\int_a^a f(x) dx = 0\).

\(\int_b^a f(x) dx = -\int_a^b f(x) dx\).

\(\int_a^b \left(f(x)+g(x)\right) dx = \int_a^b f(x)dx + \int_a^b g(x)dx\).

\(\int_a^b c f(x) dx = c \int_a^b f(x) dx\).

\(\int_a^b f(x) dx = \int_a^p f(x) dx + \int_p^b f(x)dx\) for any \(p \in [a,b].\)

The Fundamental Theorem of Calculus

The Fundamental Theorem of Calculus establishes a connection between the two branches of calculus: differential calculus and integral calculus. Differential calculus arose from the tangent problem, whereas integral calculus arose from a seemingly unrelated problem, the area problem.

Isaac Barrow discovered that these two problems are actually closely related. In fact, he realized that differentiation and integration are inverse processes. The Fundamental Theorem of Calculus gives the precise inverse relationship between the derivative and the integral.

A function \(F\) is called an antiderivative of \(f\) on an interval \(I\) if \(F'(x) = f(x)\) for all \(x\) in \(I\).

Example. Let \(f(x) = x^2\). If \(F(x) = \frac{1}{3}x^3\), then \(F'(x) = x^2 = f(x).\) Thus, \(F(x) = \frac{1}{3}x^3\) is an antiderivative of \(f(x) = x^2\). It should be noted that \(F_1(x) = \frac{1}{3}x^3 + 100\) also satisfies \(F_1'(x) = x^2\) and, hence, is also an antiderivative of \(f(x) = x^2\).

The Fundamental Theorem of Calculus. Part 1. If \(f\) is continuous on \([a,b]\), then the function \(g\) defined by

\[g(x) = \int_a^x f(t)\,dt, \qquad a \leq x \leq b,\]

is continuous on \([a,b]\) and differentiable on \((a,b)\), and \(g'(x) = f(x)\).

The Fundamental Theorem of Calculus. Part 2. If \(f\) is continuous on \([a,b]\), then

\[\int_a^b f(x)\,dx = F(b) - F(a),\]

where \(F\) is any antiderivative of \(f\), that is, a function such that \(F' = f\).

Both parts of the Fundamental Theorem of Calculus establish connections between antiderivatives and definite integrals. Part 1 says that if \(f\) is continuous, then \(\int_a^x f(t) dt\) is an antiderivative of \(f\). Part 2 says that \(\int_a^b f(x) dx\) can be found by evaluating \(F(b) - F(a)\).
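Both parts can be checked numerically. The Python sketch below assumes \(f = \cos\) on \([0,1]\) (chosen only because its antiderivative \(\sin\) is familiar) and approximates integrals with a midpoint Riemann sum; the helper name `integral` is ours. Part 2 compares the integral with \(F(b) - F(a)\); Part 1 compares a finite-difference derivative of \(g(x) = \int_a^x f(t)\,dt\) with \(f(x)\).

```python
import math

def integral(f, a, b, n=10_000):
    """Midpoint Riemann sum approximating the definite integral of f over [a, b]."""
    dx = (b - a) / n
    return sum(f(a + (k + 0.5) * dx) for k in range(n)) * dx

f = math.cos
F = math.sin          # an antiderivative of cos, since sin' = cos
a, b = 0.0, 1.0

# Part 2: the definite integral equals F(b) - F(a).
print(integral(f, a, b), F(b) - F(a))

# Part 1: g(x) = integral of f from a to x satisfies g'(x) = f(x).
def g(x):
    return integral(f, a, x)

x, h = 0.5, 1e-4
print((g(x + h) - g(x - h)) / (2 * h), f(x))  # central-difference estimate of g'(x) vs f(x)
```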

We need a convenient notation for antiderivatives that makes them easy to work with. Traditionally, the notation \(\int f(x) dx\) is used for antiderivatives of \(f\) and is called an indefinite integral.

This implies that

\[\int f(x)\,dx = F(x) \quad \text{means} \quad F'(x) = f(x).\]

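For example, we can write

\[\int x^2\,dx = \frac{1}{3}x^3 + C \quad \text{because} \quad \frac{d}{dx}\left(\frac{1}{3}x^3 + C\right) = x^2.\]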

So we can regard an indefinite integral as representing an entire family of functions, one antiderivative for each value of the constant \(C\).

You should distinguish carefully between definite and indefinite integrals. A definite integral \(\int_a^b f(x) dx\) is a number, whereas an indefinite integral \(\int f(x) dx\) is a function (or a family of functions).

The connection between definite and indefinite integrals is given by Part 2 of the Fundamental Theorem. If \(f\) is continuous on \([a,b]\), then

\[\int_a^b f(x)\,dx = \left[\int f(x)\,dx\right]_{x=a}^{x=b}.\]

Elementary Integrals

Here is the list of integrals that you should know for this course.

\(\int_a^b c dx = c(b - a)\) for any constant \(c\),

\(\int_a^b x^n dx = \frac{1}{n+1}\left[x^{n+1}\right]_{x=a}^{x=b}\) for \(n \neq -1\),

\(\int_a^b \frac{1}{x}dx = \left[\ln |x| \right]_{x=a}^{x=b}\) for \(0 \notin [a,b]\),

\(\int_a^b e^x dx = \left[ e^x \right]_{x=a}^{x=b}\).

Examples.

Find \(\int_{-2}^2 (2 + 5x) dx.\)

\[\int_{-2}^2 (2 + 5x) dx = \int_{-2}^2 2 dx + \int_{-2}^2 5 x dx = 2(2-(-2)) + 5 \left[\frac{1}{2}x^2\right]_{x=-2}^{x=2} = 8 + \frac{5}{2} (2^2 - (-2)^2) = 8 + 0 =8.\]

Find the area under the parabola \(y=x^2\) from 0 to 1. The required area is equal to

\[\int_0^1 x^2 dx = \left[\frac{1}{3}x^3 \right]_{x=0}^{x=1} = \frac{1}{3}\left[x^3 \right]_{x=0}^{x=1} = \frac{1}{3} (1^3 - 0^3) = \frac{1}{3}.\]

Evaluate \(\int_3^6 \frac{1}{x}dx.\)

\[\int_3^6 \frac{1}{x}dx = \left[\ln |x| \right]_{x=3}^{x=6} = \ln 6 - \ln 3 = \ln 2.\]
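As a sanity check on the exact value \(\ln 6 - \ln 3 = \ln 2\), a midpoint Riemann sum for \(1/x\) on \([3,6]\) should agree to many digits. This is a minimal Python sketch; the helper name `midpoint_integral` is ours.

```python
import math

def midpoint_integral(f, a, b, n=100_000):
    """Midpoint Riemann sum approximating the integral of f over [a, b]."""
    dx = (b - a) / n
    return sum(f(a + (k + 0.5) * dx) for k in range(n)) * dx

approx = midpoint_integral(lambda x: 1 / x, 3.0, 6.0)
exact = math.log(6) - math.log(3)   # [ln|x|] from 3 to 6
print(approx, exact, math.log(2))
```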

Improper Integrals

So far we assumed that a definite integral \(\int_a^b f(x) dx\) was defined on a finite interval \([a,b]\). An improper integral extends the concept of a definite integral to infinite intervals.

Definition. If \(\int_a^t f(x) dx\) exists for every number \(t \geq a\), then

\[\int_a^{\infty} f(x)\,dx = \lim_{t \to \infty} \int_a^t f(x)\,dx,\]

provided this limit exists (as a finite number).

Definition. If \(\int_t^b f(x) dx\) exists for every number \(t \leq b\), then

\[\int_{-\infty}^b f(x)\,dx = \lim_{t \to -\infty} \int_t^b f(x)\,dx,\]

provided this limit exists (as a finite number).

Definition. The improper integrals \(\int_a^{\infty} f(x)dx\) and \(\int_{-\infty}^b f(x)dx\) are called convergent if the corresponding limits exist. They are called divergent if the limits do not exist.

Definition. If both \(\int_a^{\infty} f(x)dx\) and \(\int_{-\infty}^a f(x)dx\) are convergent, then we define

\[\int_{-\infty}^{\infty} f(x)\,dx = \int_{-\infty}^a f(x)\,dx + \int_a^{\infty} f(x)\,dx,\]

where \(a\) is any real number.

Example. Determine whether the integral \(\int_1^{\infty} (1/x) dx\) is convergent or divergent.

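Solution.

\[\int_1^{\infty} \frac{1}{x}\,dx = \lim_{t \to \infty} \int_1^t \frac{1}{x}\,dx = \lim_{t \to \infty} \left[\ln |x|\right]_{x=1}^{x=t} = \lim_{t \to \infty} \ln t = \infty.\]

The limit does not exist as a finite number, so the integral \(\int_1^{\infty} (1/x) dx\) is divergent.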
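The divergence is slow, which is why it can be surprising. The Python sketch below tabulates the truncated integral \(\int_1^t (1/x)\,dx = \ln t\) for growing \(t\). For contrast only, it also shows \(\int_1^t (1/x^2)\,dx = 1 - 1/t\), an integrand not discussed above, which converges to 1. The function names are ours.

```python
import math

def truncated_recip(t):
    """integral from 1 to t of 1/x dx = ln t (grows without bound)."""
    return math.log(t)

def truncated_recip_sq(t):
    """integral from 1 to t of 1/x^2 dx = 1 - 1/t (tends to 1)."""
    return 1 - 1 / t

for t in (10.0, 1e3, 1e6, 1e12):
    print(f"t={t:g}  1/x: {truncated_recip(t):8.3f}   1/x^2: {truncated_recip_sq(t):.12f}")
```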

Important: The notation in the Review of Integrals is unrelated to the notation in the remaining part of the lecture.

Probability Density Function

Continuous random variables are described by their probability density functions (PDFs). If \(f\) is a PDF, then it has the following properties:

\( f(x) \geq 0,\)

\( \int_{- \infty}^{\infty} f(x) dx = 1.\)

In addition, if \(F\) is the corresponding CDF, then

\(f(x) = \frac{d F(x)}{dx},\) and

\(F(a) = P(X\leq a) = \int_{-\infty}^a f(x) dx.\)

The probability density function plays a central role in probability theory, because all probability statements about a continuous random variable can be answered in terms of its PDF. For instance, for a continuous random variable \(X\), the relation \(P(X\leq a) = \int_{-\infty}^a f(x) dx\) gives

\[P(a \leq X \leq b) = \int_a^b f(x)\,dx.\]

Note: If we let \(a = b\), then

\[P(X = a) = \int_a^a f(x)\,dx = 0.\]

Although this result might seem strange at first, it is quite natural. If a continuous random variable had a positive probability of taking one particular value, there would be no reason for the same not to hold for all of its possible values. Since there are infinitely many such values, the probabilities would then add up to infinity rather than 1.
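To see numerically why \(P(X = a) = 0\), the sketch below shrinks an interval around a point \(a\) and computes its probability as \(F(a+h) - F(a-h)\). It assumes a hypothetical density \(f(x) = 2x\) on \([0,1]\) (zero elsewhere), chosen only because its CDF \(F(x) = x^2\) is easy to write; the function names are illustrative.

```python
def F(x):
    """CDF of a hypothetical density f(x) = 2x on [0, 1], i.e. F(x) = x^2 there."""
    if x <= 0:
        return 0.0
    if x >= 1:
        return 1.0
    return x * x

def prob_between(lo, hi):
    """P(lo <= X <= hi) = F(hi) - F(lo) for a continuous random variable X."""
    return F(hi) - F(lo)

a = 0.5
for h in (0.1, 0.01, 0.001, 0.0):
    # the interval [a - h, a + h] shrinks to the single point a
    print(h, prob_between(a - h, a + h))
```

The probability shrinks in proportion to the interval's width and is exactly 0 when the interval collapses to a point.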

Example. Suppose that \(X\) is a continuous random variable with probability density function

Here \(c\) is a constant.

a. What is the value of \(c\)?

b. Find \(P(X>1).\)

Solution. a. Since \(f\) is a probability density function, we must have \(\int_{-\infty}^{\infty} f(x)dx = 1.\) Because the PDF is non-zero only on the interval \((0,2)\), this is equivalent to

\[\int_0^2 f(x)\,dx = 1.\]

Let us first compute the integral

Since \(\frac{8}{3}c = 1\), we find that \(c = \frac{3}{8}.\)

b.

Expected Value

We define the expected value of a continuous random variable \(X\) with PDF \(f\) as

\[E[X] = \int_{-\infty}^{\infty} x f(x)\,dx.\]

Since the set of possible values of \(X\) is often restricted to a certain interval (the PDF is zero outside it), the improper integral in this definition usually reduces to a definite integral over that interval.

Example. Find \(E[X]\) when the density function of \(X\) is

Solution.

Variance

We have defined the variance of a random variable \(X\) with mean \(\mu = E[X]\) as

\[\operatorname{Var}(X) = E\left[(X - \mu)^2\right].\]

We also said that for a discrete random variable with PMF \(p\) and \(\overline{X} = \mu\), the variance can be computed from

\[\operatorname{Var}(X) = \sum_{x} (x - \mu)^2 p(x).\]

Similarly, for a continuous random variable with density function \(f(x)\) and \(\overline{X} = \mu\),

\[\operatorname{Var}(X) = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx.\]
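The two formulas \(E[X] = \int x f(x)\,dx\) and \(\operatorname{Var}(X) = \int (x-\mu)^2 f(x)\,dx\) translate directly into numerical integration. The Python sketch below assumes the density is zero outside a finite interval \([a,b]\) and uses a midpoint Riemann sum; the function names and the example density \(f(x) = 2x\) on \([0,1]\) (exact mean \(2/3\), exact variance \(1/18\)) are our own illustrative choices.

```python
def midpoint_integral(g, a, b, n=100_000):
    """Midpoint Riemann sum for the integral of g over [a, b]."""
    dx = (b - a) / n
    return sum(g(a + (k + 0.5) * dx) for k in range(n)) * dx

def mean_and_variance(f, a, b):
    """E[X] and Var(X) for a density f assumed to be zero outside [a, b]."""
    mu = midpoint_integral(lambda x: x * f(x), a, b)               # E[X]
    var = midpoint_integral(lambda x: (x - mu) ** 2 * f(x), a, b)  # E[(X - mu)^2]
    return mu, var

# Hypothetical density f(x) = 2x on [0, 1]: exact values are 2/3 and 1/18.
print(mean_and_variance(lambda x: 2 * x, 0.0, 1.0))
```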

The Continuous Uniform Distribution

What if I ask you to choose a random number between 0 and 1, including 0 and 1?

You might pick 1, or 0.5, or 0.01, or 0.9999997, etc. No number in \([0,1]\) is favored over any other: the probability that the chosen number falls in a subinterval of \([0,1]\) depends only on the length of that subinterval. This is an example of a uniformly distributed continuous random variable.

Definition. The PDF of the continuous uniform distribution on \([0,1]\) is given by

\[f(x) = \begin{cases} 1, & 0 \leq x \leq 1, \\ 0, & \text{otherwise}. \end{cases}\]

This is called the uniform density on \([0,1]\).

For a general interval \([a,b]\), this function becomes

\[f(x) = \begin{cases} \dfrac{1}{b-a}, & a \leq x \leq b, \\ 0, & \text{otherwise}. \end{cases}\]

The expected value and the variance of a continuous uniform distribution on \([a,b]\) are, respectively,

\[E[X] = \frac{a+b}{2}, \qquad \operatorname{Var}(X) = \frac{(b-a)^2}{12}.\]
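The uniform mean \((a+b)/2\) and variance \((b-a)^2/12\) can also be checked by simulation. The Python sketch below draws many values with the standard library's `random.uniform`; the interval \([2, 8]\) (where the formulas give 5 and 3) and the seed are arbitrary illustrative choices.

```python
import random

a, b = 2.0, 8.0                 # illustrative interval [a, b]
rng = random.Random(0)          # fixed seed so reruns give the same sample
sample = [rng.uniform(a, b) for _ in range(200_000)]

n = len(sample)
mean = sum(sample) / n                                # sample mean
variance = sum((x - mean) ** 2 for x in sample) / n   # sample variance (divide by n)

print("mean:", mean, "  (a + b) / 2 =", (a + b) / 2)
print("variance:", variance, "  (b - a)^2 / 12 =", (b - a) ** 2 / 12)
```

With 200,000 draws, the sample values typically land within a few thousandths of the formulas.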

Example. Let \(X\) be a continuous random variable that is uniformly distributed over \([0,10]\). Find \(E[X].\)

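Solution. Our intuition tells us that \(E[X]\) should be equal to 5, the midpoint of \([0,10].\) Let us confirm this by a computation, using the density \(f(x) = \frac{1}{10}\) on \([0,10]\):

\[E[X] = \int_{-\infty}^{\infty} x f(x)\,dx = \int_0^{10} x \cdot \frac{1}{10}\,dx = \frac{1}{10}\left[\frac{1}{2}x^2\right]_{x=0}^{x=10} = \frac{1}{10} \cdot 50 = 5.\]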

Let us look next at a more interesting example.

Example. Buses arrive at a specified stop at 15-minute intervals starting at 7 am. That is, they arrive at 7, 7:15, 7:30, 7:45, and so on. If a passenger arrives at the stop at a time that is uniformly distributed between 7 and 7:30, find the probability that he waits less than 5 minutes for a bus.

Solution. Let \(X\) denote the number of minutes past 7 that the passenger arrives at the stop. Then \(X\) is a uniform random variable over the interval \((0, 30)\). The passenger waits less than 5 minutes if and only if he arrives between 7:10 and 7:15 or between 7:25 and 7:30. Hence, the desired probability is

\[P(10 < X < 15) + P(25 < X < 30) = \int_{10}^{15} \frac{1}{30}\,dx + \int_{25}^{30} \frac{1}{30}\,dx = \frac{5}{30} + \frac{5}{30} = \frac{1}{3}.\]
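A quick Monte Carlo simulation of the bus example: the passenger's arrival is drawn uniformly from 0 to 30 minutes past 7, and the wait is the time to the next multiple of 15 minutes. Since the favorable arrival times form two 5-minute windows out of 30, the estimate should come out close to \(1/3\). The function name and seed are illustrative.

```python
import random

def waits_less_than_5(arrival):
    """arrival = minutes past 7:00; buses arrive every 15 minutes starting at 7:00."""
    wait = (-arrival) % 15   # minutes until the next multiple of 15
    return wait < 5

rng = random.Random(1)       # fixed seed for reproducibility
trials = 200_000
hits = sum(waits_less_than_5(rng.uniform(0, 30)) for _ in range(trials))
p_hat = hits / trials
print(p_hat)                 # estimate of P(wait < 5 minutes)
```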

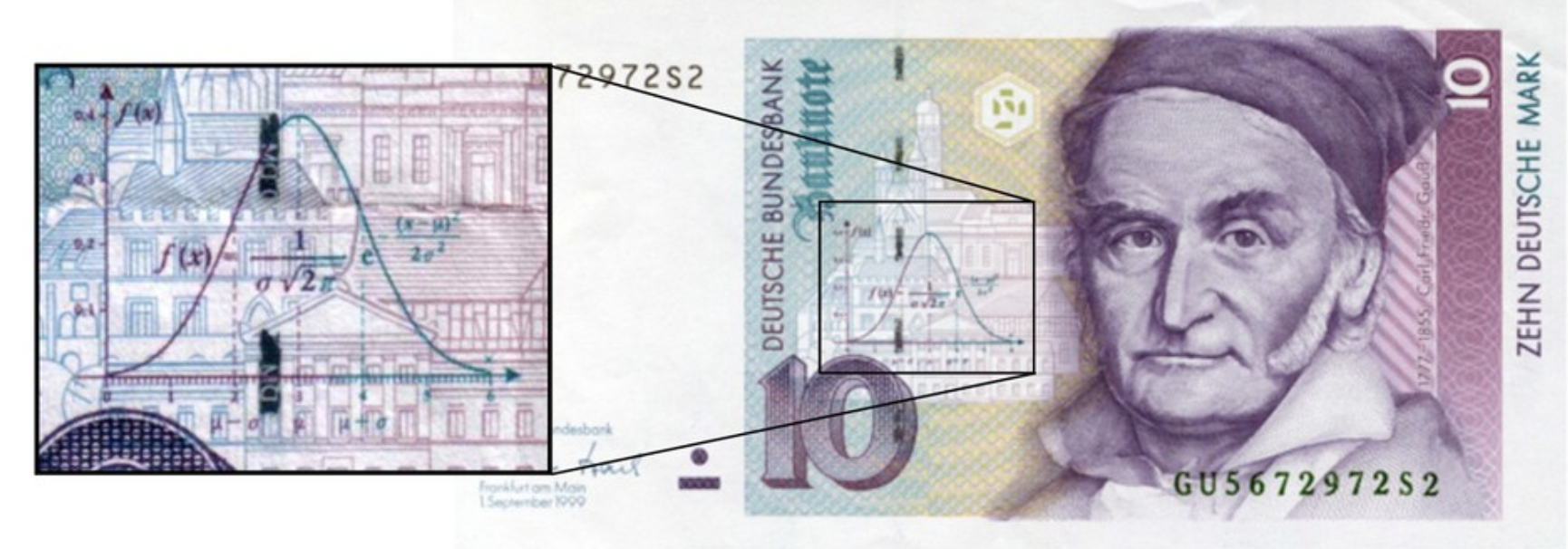

The Gaussian Distribution

The Gaussian distribution is also called the normal distribution.

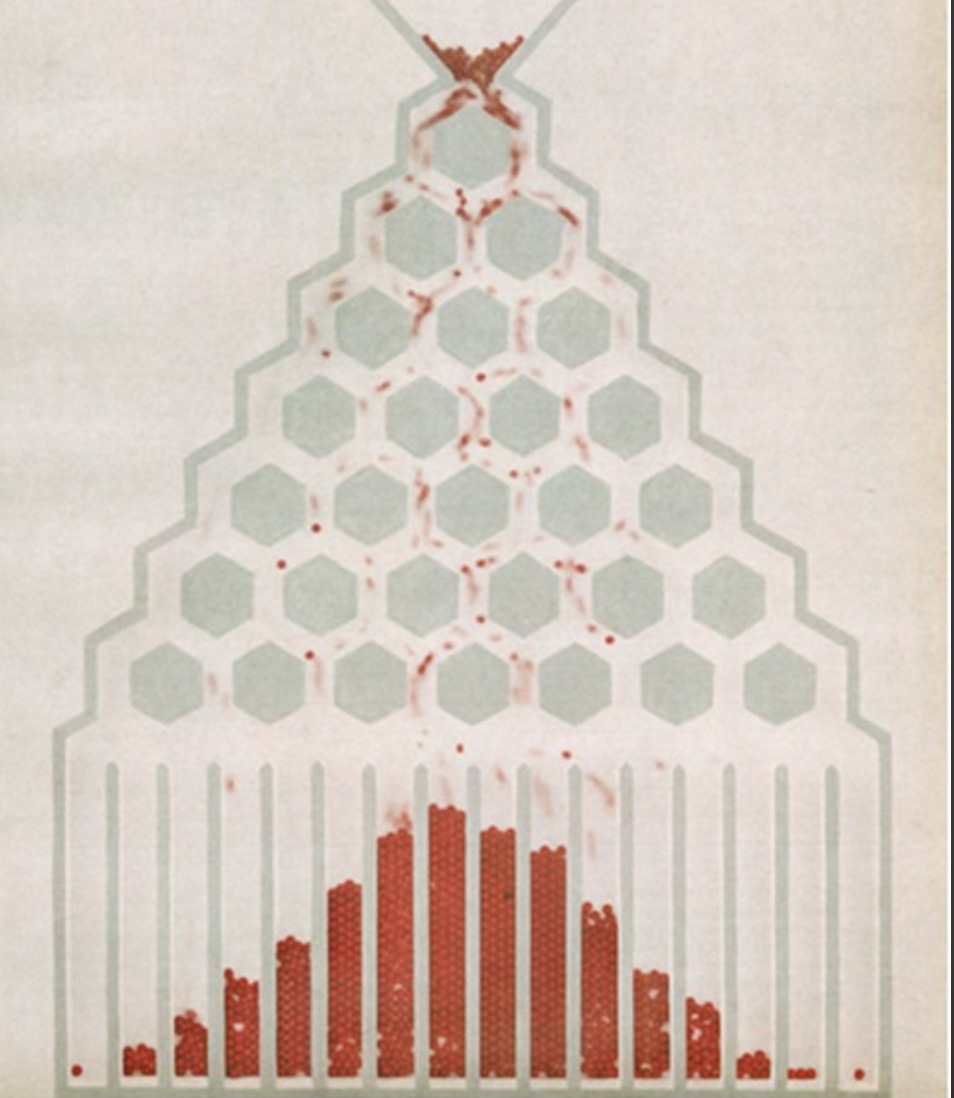

One way of thinking of the Gaussian distribution is as the limit of the (suitably centered and rescaled) binomial distribution when the number of trials \(N\) is large, that is, the limit of the sum of many Bernoulli trials.

However, sums of other random variables (not just Bernoulli trials) converge to the Gaussian distribution as well.

We will make extensive use of the Gaussian distribution! The celebrated Central Limit Theorem (CLT) is the main reason we will use it so much. Informally, the CLT states the following.

The sum of a large number of independent observations from any distribution with finite variance tends to have a Gaussian distribution.
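The CLT statement can be illustrated by simulation. The Python sketch below sums \(n = 30\) independent Uniform(0, 1) draws (mean \(1/2\), variance \(1/12\)), standardizes each sum, and checks how often the result lies within 1 and 2 standard deviations of 0. For a standard Gaussian these fractions are about 0.683 and 0.954. The choice of summands, \(n\), and the number of repetitions are our own.

```python
import math
import random

rng = random.Random(2)     # fixed seed for reproducibility
n = 30                     # number of Uniform(0, 1) terms in each sum
reps = 50_000              # number of simulated sums

mu, var = 0.5, 1 / 12      # mean and variance of a single Uniform(0, 1) draw
z = []
for _ in range(reps):
    s = sum(rng.random() for _ in range(n))
    z.append((s - n * mu) / math.sqrt(n * var))   # standardized sum

within1 = sum(abs(v) <= 1 for v in z) / reps
within2 = sum(abs(v) <= 2 for v in z) / reps
print(within1, within2)
```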

The normal distribution has two parameters: the mean and the variance, \(\mu\) and \(\sigma^2\), respectively.

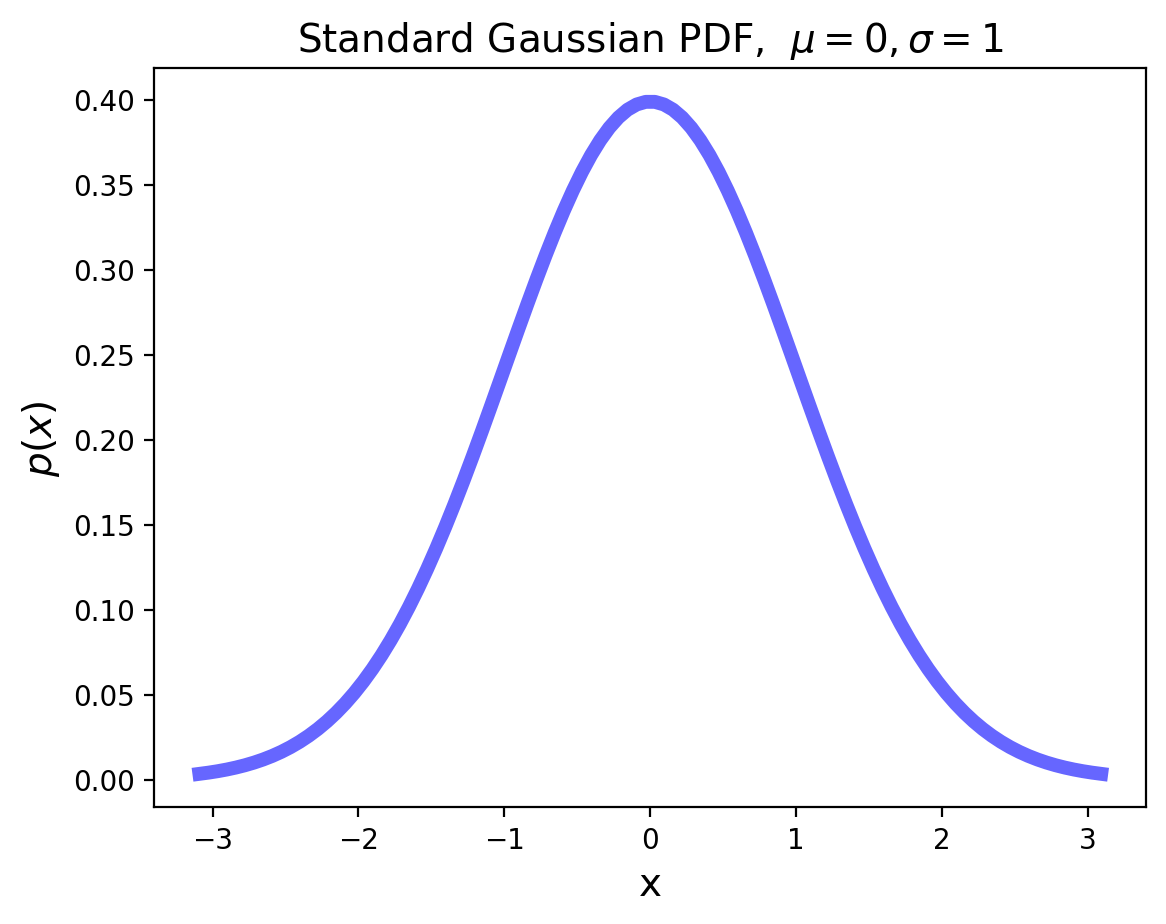

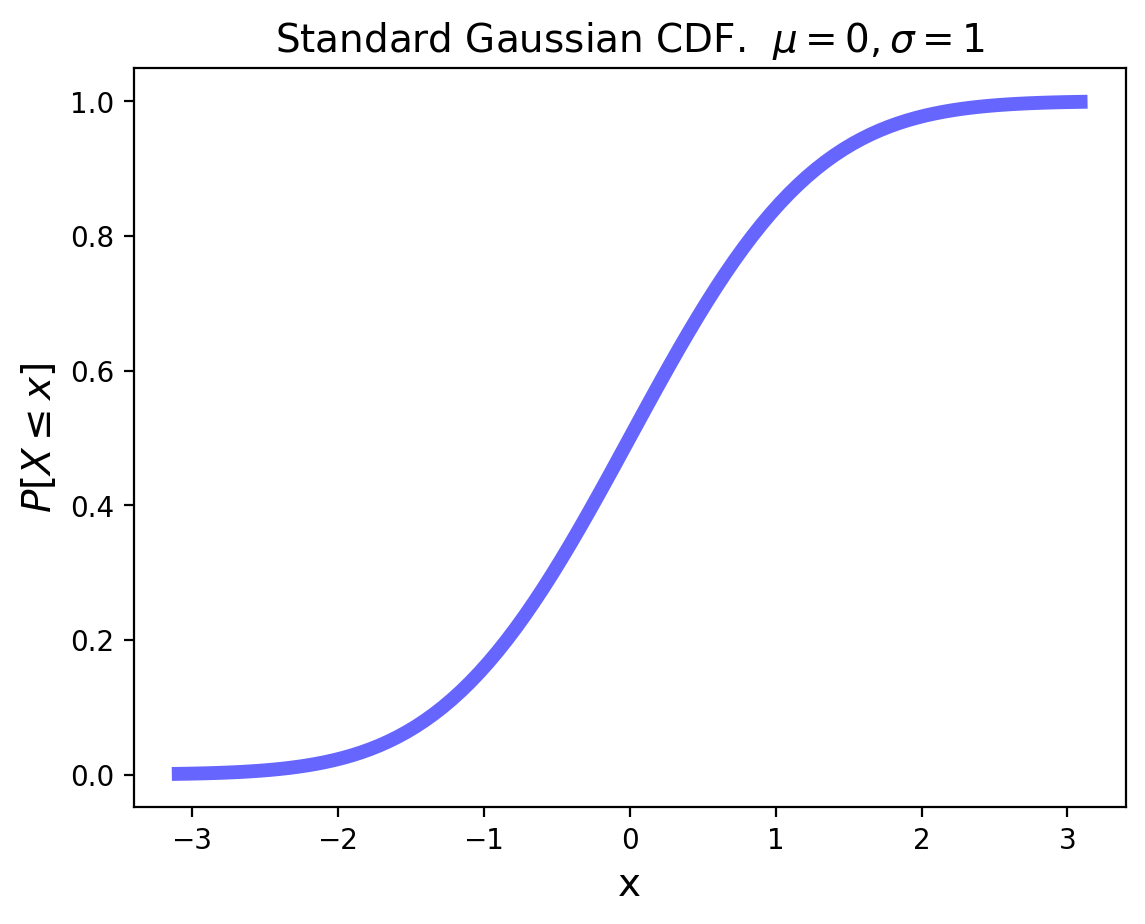

The standard Gaussian distribution has mean zero and variance (and therefore standard deviation) 1. The PDF of the standard Gaussian is

\[f(x) = \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}, \qquad -\infty < x < \infty.\]

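For an arbitrary Gaussian distribution with mean \(\mu\) and variance \(\sigma^2\), the PDF is simply the standard Gaussian relocated to have its center at \(\mu\) and its width scaled by \(\sigma\) (its height is divided by \(\sigma\) so that the total area stays 1):

\[f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right), \qquad -\infty < x < \infty.\]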
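The Gaussian PDF \(\frac{1}{\sigma\sqrt{2\pi}} e^{-(x-\mu)^2/(2\sigma^2)}\) is short enough to write by hand. The Python sketch below does so, compares it with the standard library's `statistics.NormalDist(mu, sigma).pdf`, and checks with a midpoint Riemann sum over \(\mu \pm 10\sigma\) that the total area is 1. The parameters \(\mu = 1\), \(\sigma = 2\) and the name `gaussian_pdf` are illustrative choices.

```python
import math
from statistics import NormalDist

def gaussian_pdf(x, mu=0.0, sigma=1.0):
    """Density of a Gaussian with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma                       # relocate to mu, rescale by sigma
    return math.exp(-z * z / 2) / (sigma * math.sqrt(2 * math.pi))

mu, sigma = 1.0, 2.0                           # illustrative parameters
reference = NormalDist(mu=mu, sigma=sigma)     # standard-library Gaussian
for x in (-1.0, 0.0, 2.5):
    print(x, gaussian_pdf(x, mu, sigma), reference.pdf(x))

# Midpoint Riemann sum over mu +/- 10 sigma; the total area should be 1.
n, lo, hi = 20_000, mu - 10 * sigma, mu + 10 * sigma
dx = (hi - lo) / n
total = sum(gaussian_pdf(lo + (k + 0.5) * dx, mu, sigma) for k in range(n)) * dx
print(total)
```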

The Exponential Distribution

The exponential distribution is a continuous distribution associated with a Poisson process. It has one parameter, \(\lambda\), the rate of successes per unit time, and it answers the question: “How long does it take to obtain the first success?”

Definition. A continuous random variable whose PDF is given, for some \(\lambda > 0\), by

\[f(x) = \begin{cases} \lambda e^{-\lambda x}, & x \geq 0, \\ 0, & x < 0, \end{cases}\]

is said to be exponentially distributed with parameter \(\lambda\).

Its mean is \(1/\lambda\), and the variance is \(1/\lambda^2\).

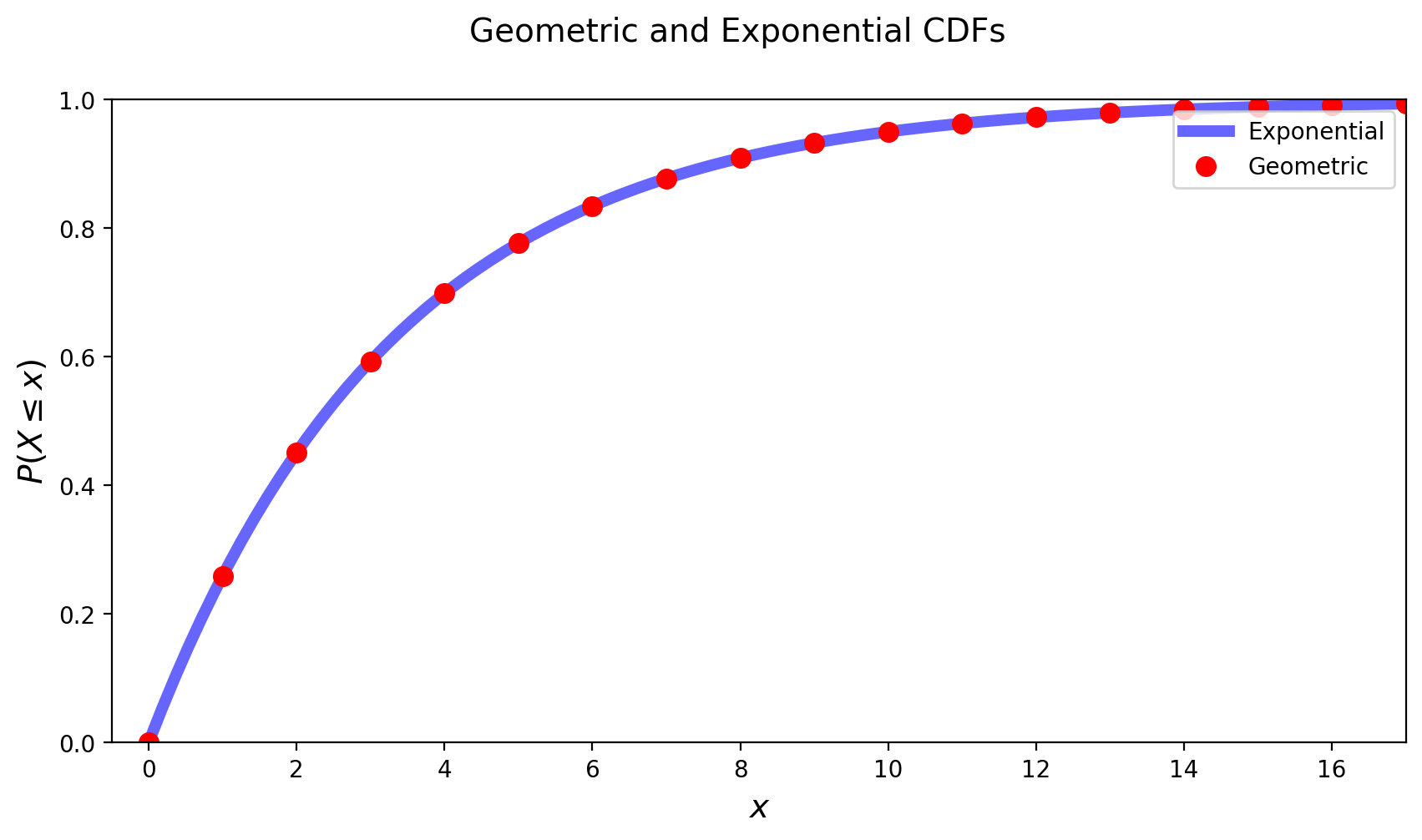

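The CDF, \(F(a)\), of an exponential random variable is given by

\[F(a) = P(X \leq a) = \int_0^a \lambda e^{-\lambda x}\,dx = 1 - e^{-\lambda a}, \qquad a \geq 0.\]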
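The mean \(1/\lambda\) and the CDF \(F(a) = 1 - e^{-\lambda a}\) can be compared against simulated data. The Python sketch below uses the standard library's `random.expovariate(lambd)` to draw exponential samples; the rate \(\lambda = 0.5\) (mean 2), the seed, and the function name `exp_cdf` are illustrative assumptions.

```python
import math
import random

lam = 0.5                         # rate lambda, chosen for illustration (mean 1/lam = 2)
rng = random.Random(3)            # fixed seed for reproducibility
sample = [rng.expovariate(lam) for _ in range(200_000)]

def exp_cdf(a, lam):
    """F(a) = P(X <= a) = 1 - exp(-lam * a) for a >= 0, and 0 for a < 0."""
    return 1 - math.exp(-lam * a) if a >= 0 else 0.0

mean = sum(sample) / len(sample)
print("sample mean:", mean, "  1/lambda:", 1 / lam)
for a in (1.0, 2.0, 5.0):
    empirical = sum(x <= a for x in sample) / len(sample)   # fraction of draws <= a
    print(f"P(X <= {a}): simulated {empirical:.4f}, formula {exp_cdf(a, lam):.4f}")
```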

The exponential is the continuous analog of the geometric distribution.

In the last two lectures we introduced the binomial, geometric, Poisson, and exponential distributions. Each of these four distributions describes either

Bernoulli trials with probability \(p\), or

Poisson process with rate \(\lambda\).

In addition, each of the four distributions answers one of the following questions:

Given that a success has just occurred, how many trials or how long until the next success?

In a fixed number of trials or amount of time, how many successes occur?

Based on this, we can create a table that might help us memorize these four distributions:

| | Time (or Number of Trials) Until Success | Number of Successes in Fixed Time (or Number of Trials) |
|---|---|---|
| Bernoulli trials | Geometric | Binomial |
| Poisson process | Exponential | Poisson |