Linear Independence

Many parts of this page are based on Linear Algebra and its Applications, by David C. Lay

We start by returning the question: when does \(A\mathbf{x} = \mathbf{b}\) have a solution \(\mathbf{x}\)?

That is, when is \(A\mathbf{x} = \mathbf{b}\) consistent?

In the last lecture, we learned that \(A{\bf x} = {\bf b}\) is consistent if and only if \(\bf b\) lies in the span of the columns of \(A.\)

As an example, we saw for the following matrix \(A\):

\[A = \left[\begin{array}{rrr}1&3&4\\-4&2&-6\\-3&-2&-7\end{array}\right]\]

\(A{\bf x} = {\bf b}\) is not consistent for all \({\bf b}\).

We realized that was because the span of \(A\)’s columns is not all of \(\mathbb{R}^3\), but rather only a part of \(\mathbb{R}^3\) – namely, a plane lying within \(\mathbb{R}^3\).

So, when \(\bf b\) does not lie in that plane, then \(A{\bf x} = {\bf b}\) is not consistent and has no solution.

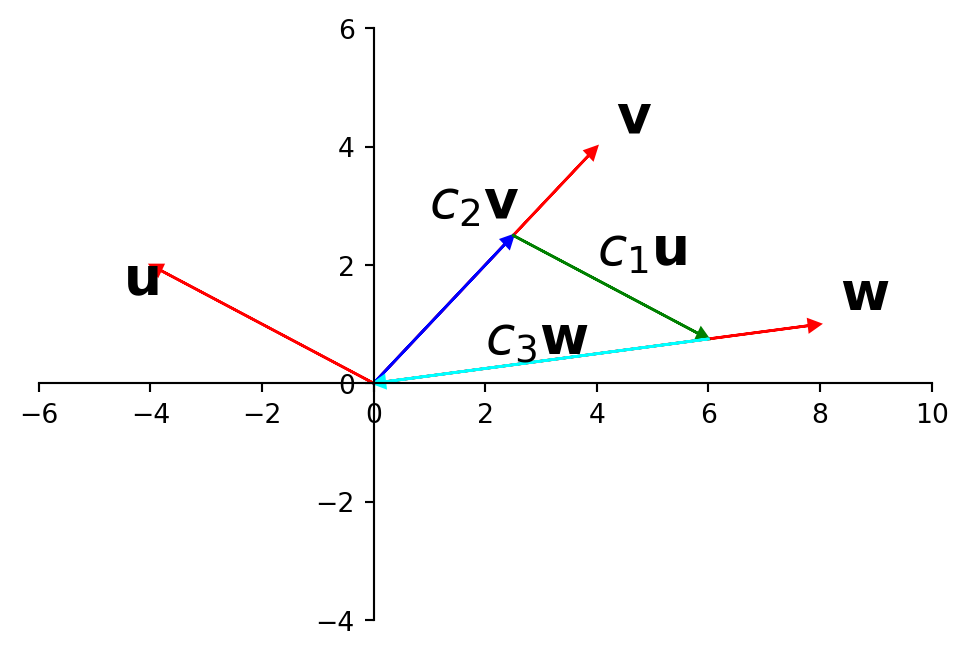

As a reminder, here is the picture of the span of the columns of \(A\).

Clearly, \(\bf 0, a_1, a_2,\) and \(\bf a_3\) have a particular relationship:

namely, they all lie within the same plane – even though the vectors are in \(\mathbb{R}^3\).

This is a special relationship. It only happens under certain circumstances.

That is, it is not the case in general that four points in \(\mathbb{R}^3\) would lie in the same plane!

Today we will talk about how to define this relationship precisely for vectors of arbitrary dimension, that is, vectors in \(\mathbb{R}^n\).

Linear Dependence

The relationship between these four vectors is called linear dependence.

Before stating the definition, let’s get a sense intuitively of what we want to capture.

We make this observation:

- the plane defined by \(\mathbf{a_1}, \mathbf{a_2}, \mathbf{a_3}\) happens to include the origin.

That’s one way of capturing the special relationship among \({\bf a_1, a_2,}\) and \({\bf a_3}.\)

Here is the formal definition:

A set of vectors \(\{{\bf v_1, ..., v_p}\}\) all of which are in \(\mathbb{R}^n\) is said to be linearly dependent if there exist weights \(\{c_1, ..., c_p\},\) not all zero, such that

\[c_1{\bf v_1} + ... + c_p{\bf v_p} = {\bf 0}.\]

Can you see how this definition captures our intuition about the special relationship of the four vectors?

Conversely, the set \(\{{\bf v_1, ..., v_p}\}\) is said is said to be linearly independent if the vector equation

\[c_1{\bf v_1} + ... + c_p{\bf v_p} = {\bf 0}.\]

has only the trivial solution \(c_1 = 0, ..., c_p = 0\).

A set of nonzero weights that yield zero is called a linear dependence relation among \(\{{\bf v_1, ..., v_p}\}\).

A set of vectors is linearly dependent if and only if it is not linearly independent.

Testing if a Set of Vectors is Linearly (In)dependent

Let’s work out how we would test, algebraically, whether a set of vectors is linearly dependent.

We’ll use a specific example.

Let \({\bf v_1} = \left[\begin{array}{r}1\\2\\3\end{array}\right], {\bf v_2} = \left[\begin{array}{r}4\\5\\6\end{array}\right],\) and \({\bf v_3} = \left[\begin{array}{r}2\\1\\0\end{array}\right].\)

Let’s determine (a) if the set \(\{{\bf v_1, v_2, v_3}\}\) is linearly independent, and (b) if not, a linear dependence relation among them.

(a). Are \(\{{\bf v_1, v_2, v_3}\}\) linearly independent?

We must determine if there is a nontrivial solution of the vector equation:

\[x_1{\bf v_1} + x_2{\bf v_2} + x_3{\bf v_3} = {\bf 0}.\]

Let’s row reduce the augmented matrix:

\[\left[\begin{array}{rrrc}1&4&2&0\\2&5&1&0\\3&6&0&0\end{array}\right] \sim \left[\begin{array}{rrrc}1&4&2&0\\0&-3&-3&0\\0&0&0&0\end{array}\right].\]

We can see that \(x_1\) and \(x_2\) are basic variables, and \(x_3\) is free.

Each nonzero value of \(x_3\) determines a nontrivial solution of the vector equation.

So \(\{{\bf v_1, v_2, v_3}\}\) are linearly dependent.

(b). To find the linear dependence relation among \({\bf v_1, v_2,}\) and \({\bf v_3},\) we continue the row reduction to obtain the reduced echelon form:

\[\left[\begin{array}{rrrc}1&4&2&0\\2&5&1&0\\3&6&0&0\end{array}\right] \sim \left[\begin{array}{rrrc}1&4&2&0\\0&-3&-3&0\\0&0&0&0\end{array}\right] \sim \left[\begin{array}{rrrc}1&0&-2&0\\0&1&1&0\\0&0&0&0\end{array}\right]\]

Which denotes the system of equations:

\[\begin{array}{rrrcl}x_1&&-2x_3&=&0\\&x_2&+x_3&=&0\\&&0&=&0\end{array}\]

So \(x_1 = 2x_3, x_2 = -x_3,\) and \(x_3\) is free.

We can choose any nonzero value for \(x_3\) – say, \(x_3 = 5\). Then \(x_1 = 10\) and \(x_2 = -5\). This gives us the solution:

\[ 10{\bf v_1} - 5{\bf v_2} + 5{\bf v_3} = {\bf 0}.\]

This is one (out of infinitely many) linear dependence relations among \({\bf v_1, v_2,}\) and \({\bf v_3}.\)

Linear Independence of Matrix Columns

The columns of a matrix are a set of vectors, so our definition of linear dependence extends naturally to them.

In particular if \(A = [{\bf a_1}\;\dots\;{\bf a_n}]\) then

\[x_1{\bf a_1} + ... + x_n{\bf a_n} = {\bf 0}\]

can be written as

\[A{\bf x} = {\bf 0}.\]

So each linear dependence relation among the columns of \(A\) corresponds to a nontrivial solution of \(A{\bf x} = {\bf 0}.\)

Putting it another way: the columns of the matrix \(A\) are linearly independent if and only if the equation \(A{\bf x} = {\bf 0}\) has only the trivial solution \({\bf x} = {\bf 0}\).

So, let’s connect this to what we know about solutions of linear systems:

- \(A{\bf x} = {\bf 0}\) is always consistent; that is, it always has the solution \({\bf x} = {\bf 0}\) (at least).

- The columns of \(A\) are linearly independent if and only if the only solution of \(A{\bf x} = {\bf 0}\) is \({\bf x} = {\bf 0}\).

- So: The columns of \(A\) are linearly dependent if and only if \(A{\bf x} = {\bf 0}\) has an infinite solution set.

So we can also say: the columns of \(A\) are linearly dependent if and only if

- The solution set of \(A{\bf x} = {\bf 0}\) has a free variable, or

- in other words, \(A\) does not have a pivot in every column.

Another Interpretation of Linear Dependence

Here is another way of thinking about linear dependence.

Theorem. A set \(S = \{{\bf v_1, ..., v_p}\}\) of two or more vectors is linearly dependent if and only if at least one of the vectors in \(S\) is a linear combination of the others.

Proof.

First, let’s consider the “if” part:

Assume \({\bf v_p} = c_1{\bf v_1} + \dots + c_{p-1} {\bf v_{p-1}}.\)

Then clearly

\[c_1{\bf v_1} + \dots + c_{p-1} {\bf v_{p-1}} - {\bf v_p} = {\bf 0},\]

and not all the coefficients are zero (the coefficient of \({\bf v_p}\) is \(-1\)). Thus, the vectors are linearly dependent.

Now, we consider the “only if” part:

Assume \(S\) is linearly dependent. Then \(c_1{\bf v_1} + \dots + c_p{\bf v_p} = 0\) and at least one of the \(c_i\) is nonzero.

Pick one of the nonzero \(c_i,\) and rearranging, we get:

\[{\bf v_i} = -(c_1/c_i){\bf v_1} + \dots + -(c_p/c_i){\bf v_p}\]

Thus, there is at least one vector that is a linear combination of the others.

Spanning Sets and Linear Dependence

Let’s return to the motivation at the start of the lecture, and formalize the connection between spanning sets and linear dependence.

We’ll start with two linearly independent vectors \({\bf u} = \left[\begin{array}{r}3\\1\\0\end{array}\right]\) and \({\bf v} = \left[\begin{array}{r}1\\6\\0\end{array}\right].\)

Let’s

- describe the set spanned by \({\bf u}\) and \({\bf v}\) and

- explain why a vector \({\bf w}\) is in Span\(\{\bf u, v\}\) if and only if \(\{\bf u, v, w\}\) is linearly dependent.

Solution.

The vectors \({\bf u}\) and \({\bf v}\) are linearly independent so they span a plane in \(\mathbb{R}^3\).

In fact, since \(x_3 = 0\) in both vectors, they span the \(x_1x_2\) plane.

Now, if \({\bf w}\) is in Span\(\{\bf u, v\}\), then \({\bf w}\) is a linear combination of \({\bf u}\) and \({\bf v}\).

So then \(\{\bf u, v, w\}\) is linearly dependent (by the Theorem we just proved).

And conversely, if \(\{\bf u, v, w\}\) is linearly dependent, then there exist \(c_1, c_2,\) and \(c_3,\) not all zero, such that

\[ c_1 {\bf u} + c_2 {\bf v} + c_3 {\bf w} = {\bf 0}.\]

We know that \({\bf u}\) and \({\bf v}\) are linearly independent, so the only way for \(c_1 {\bf u} + c_2{\bf v}\) to be zero is if \(c_1 = c_2 = 0\).

So \(c_1 {\bf u} + c_2{\bf v}\) must be nonzero, and that means \(c_3\) must be different from zero.

So we can write:

\[ {\bf w} = -(c_1/c_3) {\bf u} - (c_2/c_3) {\bf v},\]

that is, \({\bf w}\) is a linear combination of \({\bf u}\) and \({\bf v}\), and therefore lies in Span\(\{\bf u, v\}\).

So we conclude:

- If a set of vectors \(\{\bf v_1, v_2, \dots, v_p\}\) is linearly dependent, then at least one of them lies within the span of the others, and

- If one vector in the set \(\{\bf v_1, v_2, \dots, v_p\}\) lies within the span of the others, then the set is linearly dependent.

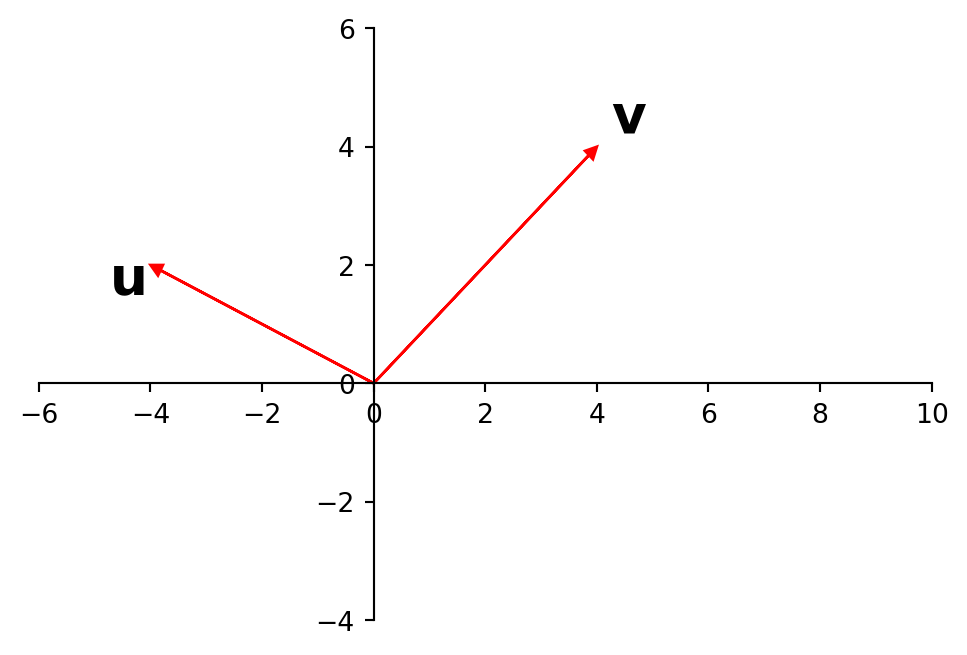

Linear Dependence Geometrically: \(\mathbb{R}^2\)

Now let’s try to get a geometric sense of linear dependence.

We’ll use \(\mathbb{R}^2\) for visualization.

Let’s try to interpret linear dependence directly in terms of the definition, which involves vector sums.

The vectors \(\{{\bf u},{\bf v}\}\) are independent because there is no nozero combination of them that yields the origin.

A nonzero combination of \({\bf u}\) and \({\bf v}\) geometrically means moving in the direction of \({\bf u}\) or \({\bf v}\) or both. There is no way to move in the direction of \({\bf u}\) and then in the direction of \({\bf v}\) and arrive back at the origin.

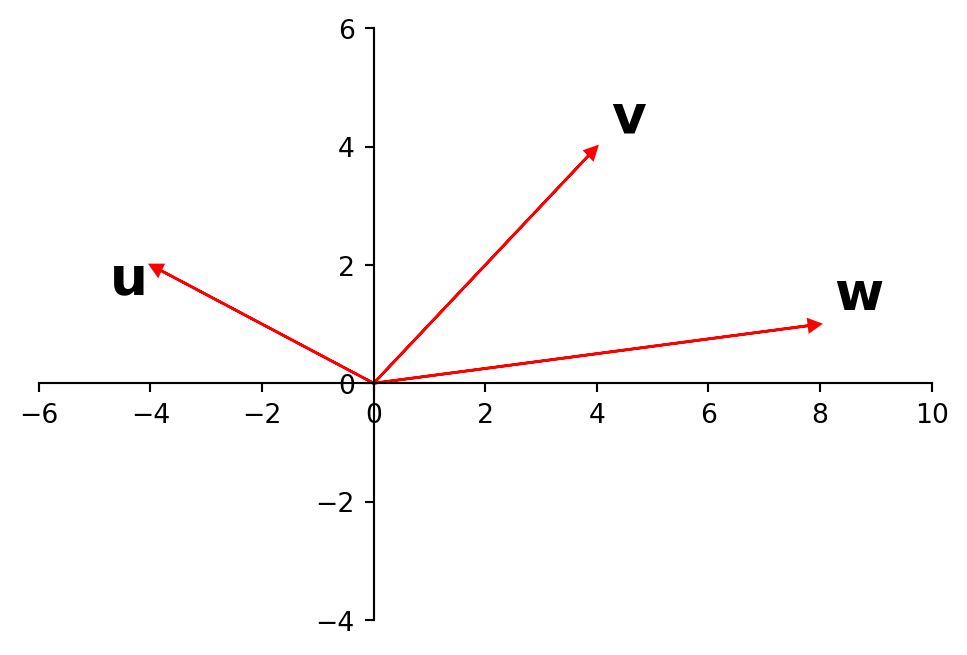

Now let’s add another vector \({\bf w}\):

Now the situation is different. The set \({\bf u, v, w}\) is linearly dependent.

There are nonzero moves along the three directions that can bring you back to the origin:

This is a geometric interpretation of the equation

\[ c_1{\bf u} + c_2{\bf v} + c_3{\bf w} = 0.\]

The last example suggested that three vectors in \(\mathbb{R}^2\) are linearly dependent.

This is in fact always true, and furthermore, we can generalize to \(\mathbb{R}^n\).

Theorem. If a set contains more vectors than there are entries in each vector, then the set is linearly dependent.

That is, any set \(\{{\bf v_1}, \dots, {\bf v_p}\}\) in \(\mathbb{R}^n\) is linearly dependent if \(p>n.\)

Proof. Let \(A = [{\bf v_1} \; \dots \; {\bf v_p}].\) Then \(A\) is \(n \times p\), and the equation \(A{\bf x} = {\bf 0}\) corresponds to a system of \(n\) equations in \(p\) unknowns.

If \(p>n,\) there are more variables than equations, so there must be a free variable.

Hence \(A{\bf x} = {\bf 0}\) has a nontrivial solution, and the columns of \(A\) are linearly dependent.

Example.

The vectors \(\left[\begin{array}{r}2\\1\end{array}\right], \left[\begin{array}{r}4\\-1\end{array}\right],\) and \(\left[\begin{array}{r}-2\\2\end{array}\right]\) are linearly dependent because these vectors live in \(\mathbb{R}^2\) and there are 3 of them.

Here is something else that we can say:

Theorem. If a set \(S = \{{\bf v_1, ..., v_p}\}\) in \(\mathbb{R}^n\) contains the zero vector, then the set is linearly dependent.

Proof. Let’s say \({\bf v_1} = {\bf 0}\). Then \(1{\bf v_1} + 0{\bf v_2}+ \dots + 0{\bf v_p} = {\bf 0}.\) The coefficients are not all zero, so the set is linearly dependent.

Application Example: Network Flow

Systems of linear equations arise when considering flow through a network.

A network is a set of nodes and links. Links connect nodes.

Be aware that there is another set of terminology that is used more often in theoretical computer science and mathematics: A graph , which consists of vertices and edges. A network and a graph are exactly the same thing.

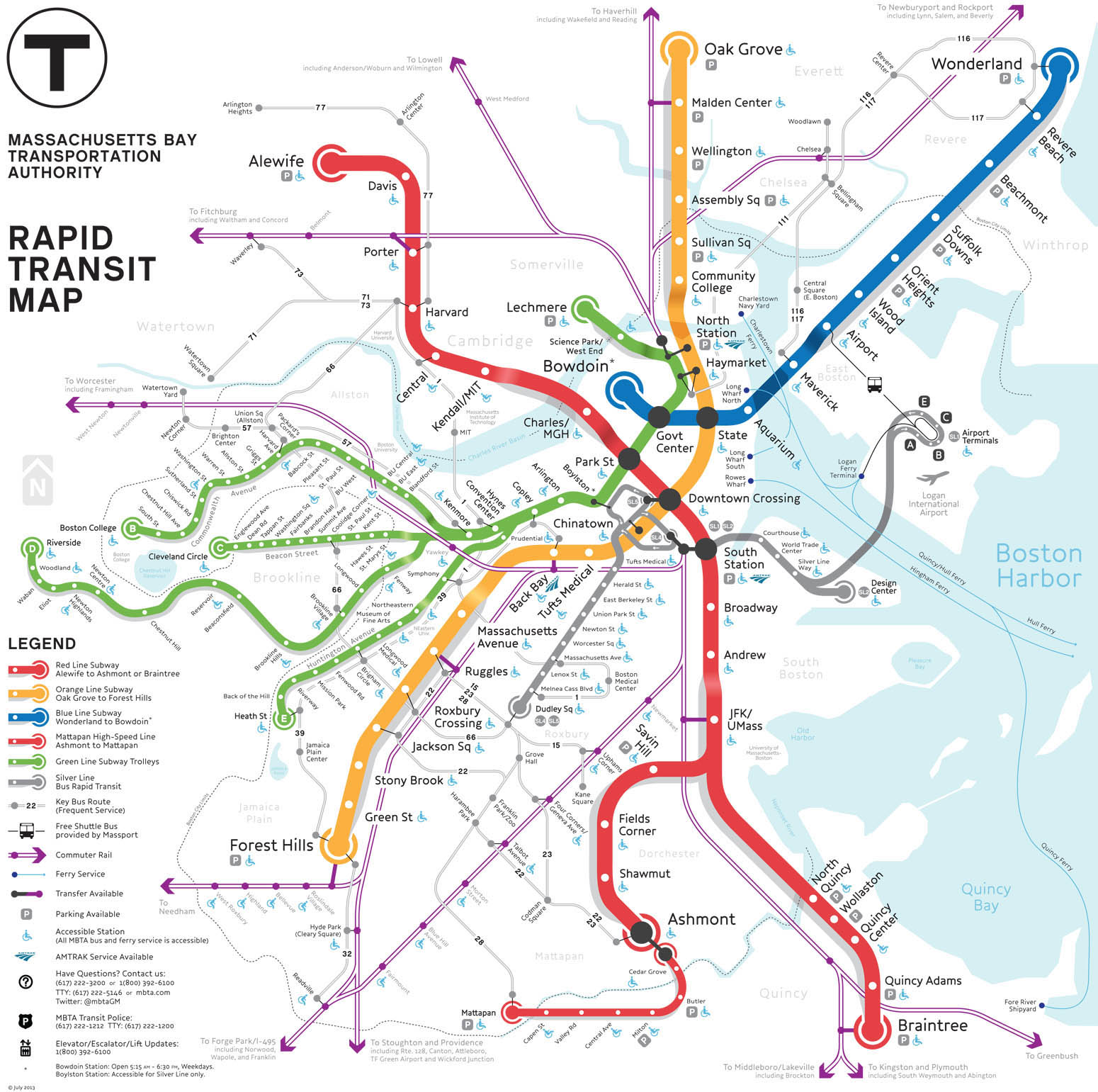

Many times we are interested in flow through a network. Flows can represent movement from place to place. For example, consider this map of the MBTA:

We can think of T stops as nodes and rail connections as links. Flow corresponds to the movement of subway cars from station to station.

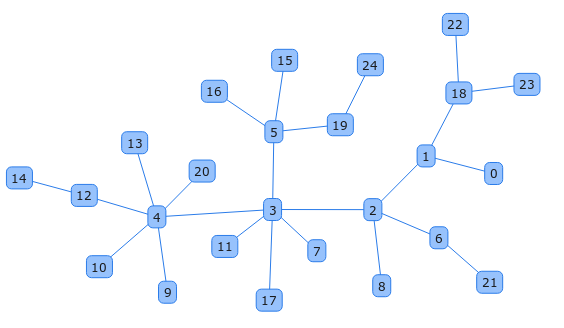

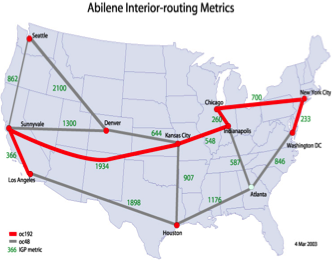

Here is another example: this is a representation of the Abilene data network, which is used by universities to exchange data traffic (packets) within the US:

Networks usually obey the rule of “flow balance” or “conservation of flow.”

This simply means that the amount of flow going into a node equals the amount coming out.

For example, the number of packets that enter any node of the Abilene network equals the number that leave that node. This reflects the fact that packets are not created or destroyed within the network.

Image Source: Lay, 4th edition.

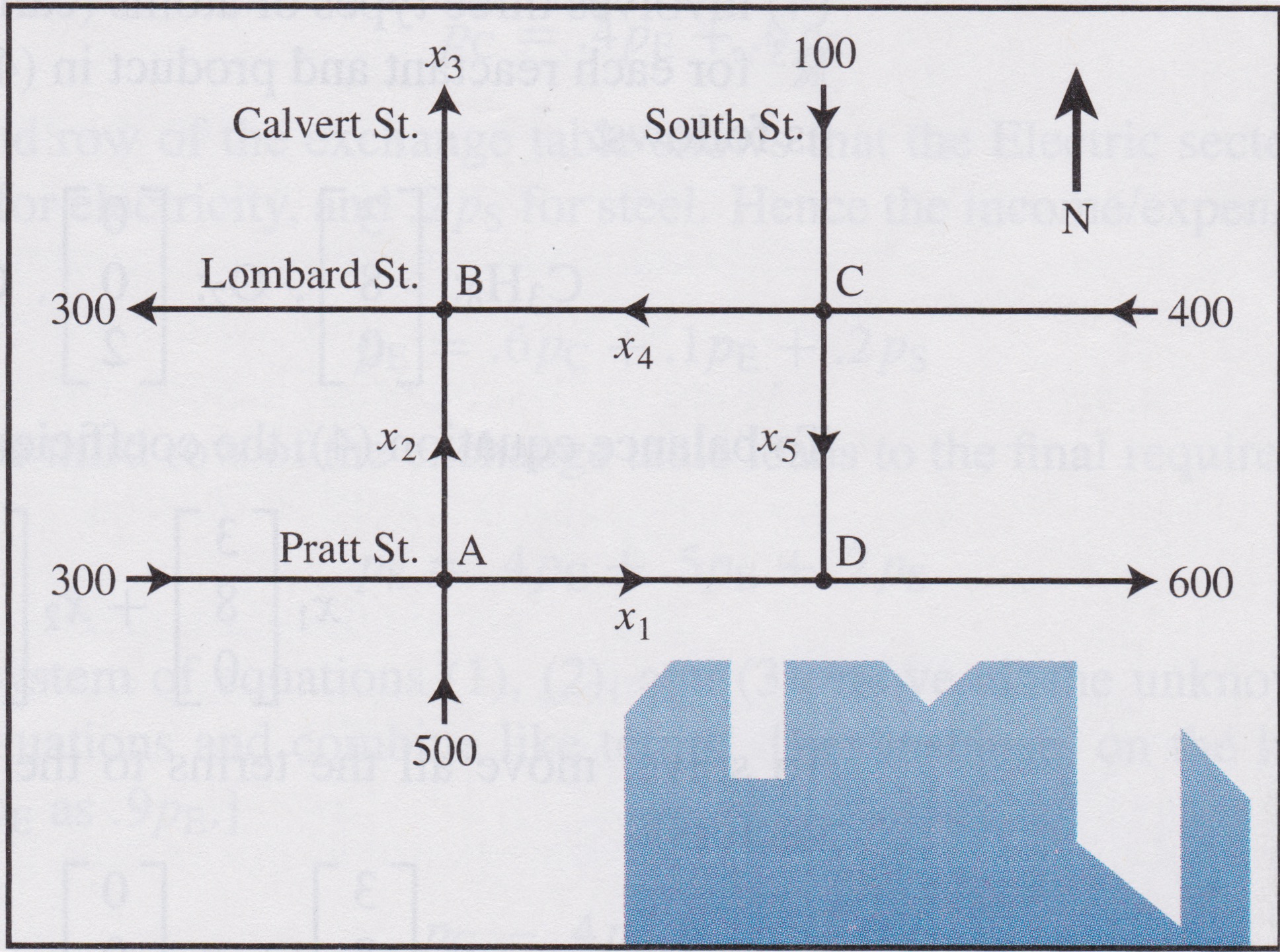

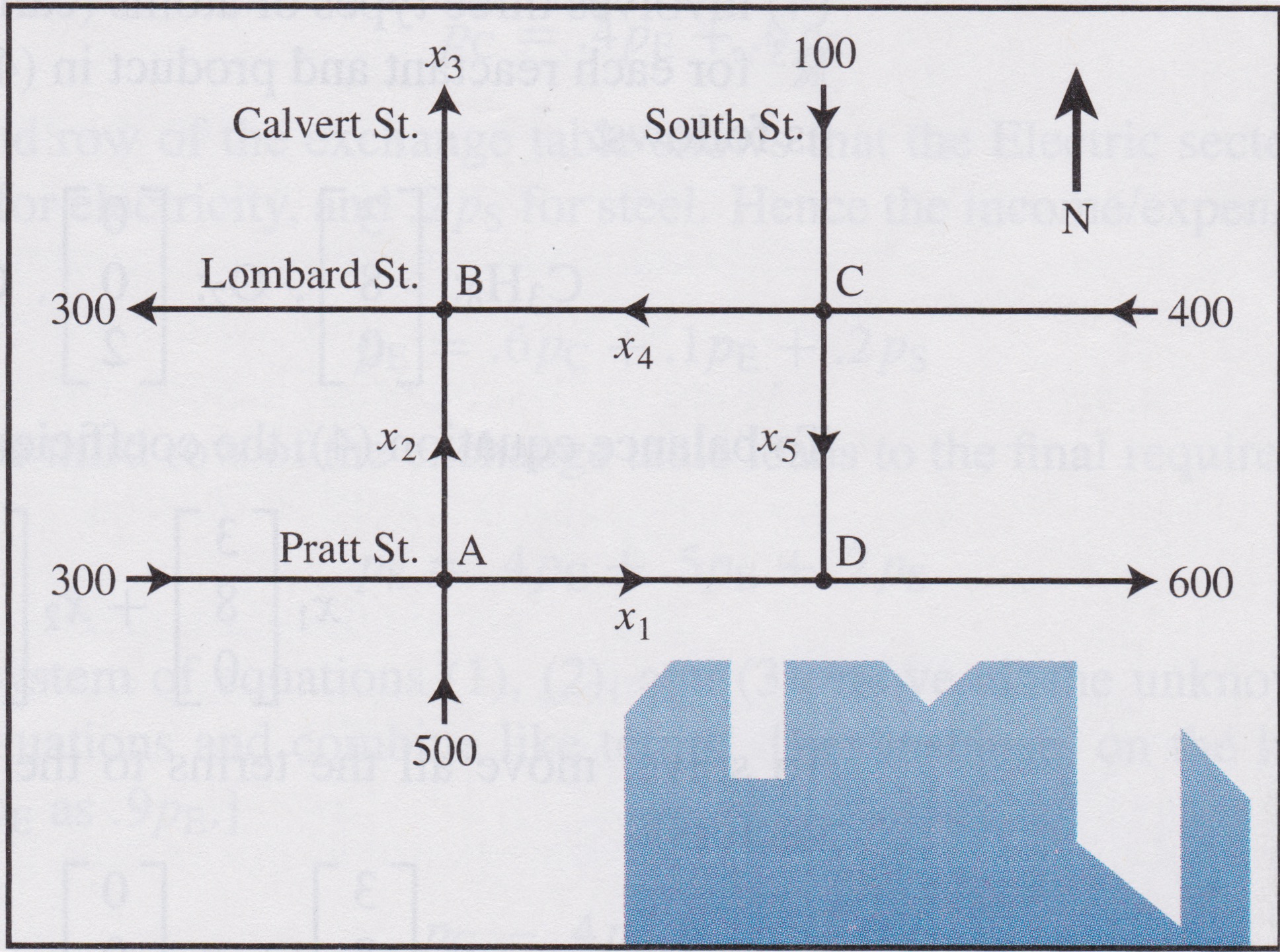

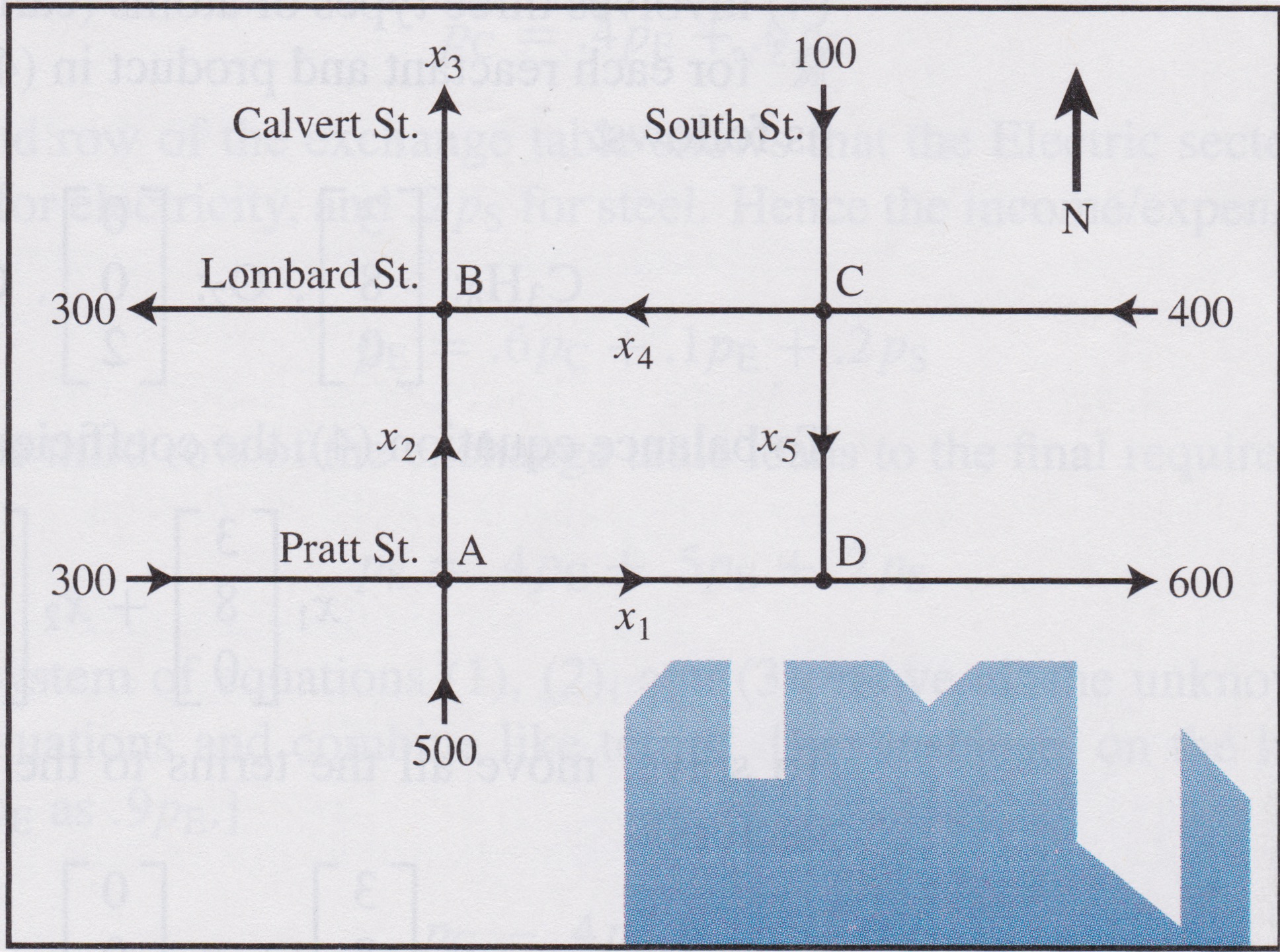

Here is another example: a simplified view of downtown Baltimore during rush hour.

The flows are vehicles per hour.

Note that some of the traffic flows are measured, and some are not. The unmeasured flows are marked with symbols \(x_1, x_2,\) etc.

We’d like to understand the traffic pattern in the city, despite the presence of unmeasured flows. We can do that by using the principle of flow balance.

The key idea is: flow balance dictates that every node determines a linear equation. The equation is of the form:

\[ \text{Flow in} = \text{Flow out}.\]

| Intersection | Flow in | Flow out |

|---|---|---|

| A | 300 + 500 | \(x_1 + x_2\) |

| B | \(x_2 + x_4\) | 300 + \(x_3\) |

| C | 100 + 400 | \(x_4 + x_5\) |

| D | \(x_1 + x_5\) | 600 |

| network | 1300 | 900 + \(x_3\) |

The last line indicates that the total flow into the network equals the total flow out of the network, which means that \(x_3 = 400.\)

This yields the following system of equations:

\[\begin{array}{rrrrrcl} x_1 & + x_2 & & & & = & 800\\ & x_2 & -x_3 & + x_4 & & = &300\\ &&&x_4 & +x_5 &=& 500\\ x_1 &&&& + x_5 &=& 600\\ &&x_3&&&=&400\\ \end{array}\]

When we row reduce the associated augmented matrix, the reduced echelon form yields these equations:

\[\begin{array}{rrrrrcl} x_1 & & & & +x_5 & = & 600\\ & x_2 & & & -x_5 & = &200\\ && x_3 & & &=& 400\\ &&& x_4 & + x_5 &=& 500\\ \end{array}\]

Thus the general flow pattern for the network is described by

\[\left\{\begin{array}{l} x_1 = 600 - x_5\\ x_2 = 200 + x_5\\ x_3 = 400\\ x_4 = 500 - x_5\\ x_5 \text{ is free}\\ \end{array}\right.\]

How can we interpret this result?

A negative flow corresponds to a flow in the opposite direction assumed in the diagram. Since these streets are one-way, none of the variables here can be negative. So, for example, \(x_5 \leq 500\) because \(x_4\) cannot be negative.