Distances and Timeseries

Contents

%matplotlib inline

%config InlineBackend.figure_format='retina'

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from IPython.display import Image, display_html, display, Math, HTML;

Distances and Timeseries#

Today we will start building some tools for making comparisons of data objects, with particular attention to timeseries.

Working with data, we can encounter a wide variety of different data objects:

Records of users

Graphs

Images

Videos

Text (webpages, books)

Strings (DNA sequences)

Timeseries

…

How can we compare them?

Feature space representation#

Usually a data object consists of a set of attributes.

These are also commonly called features.

(“J. Smith”, 25, $ 200,000)

(“M. Jones”, 47, $ 45,000)

If all \(d\) dimensions are real-valued then we can visualize each data object as a point in a \(d\)-dimensional vector space.

(25, USD 200000)\(\rightarrow \begin{bmatrix}25\\200000\end{bmatrix}\).

Likewise If all features are binary then we can think of each data object as a binary vector in vector space.

The space is called feature space.

Vector spaces are such a useful tool that we often use them even for non-numeric data.

For example, consider a categorical variable that can be only one of “house”, “tree”, “moon”.

For such a variable, we can use a one-hot encoding.

We would encode as follows:

house: \([1, 0, 0]\)tree: \([0, 1, 0]\)moon: \([0, 0, 1]\)

So an encoding of (25, USD 200000, 'house')

could be:

We will see many other encodings that take non-numeric data and encode into vectors or matrices.

For example, there are vector or matrix encodings for:

Graphs

Images

Text

etc.

Similarity#

We then are naturally interested in how similar or dissimilar two objects are.

A dissimilarity function takes two objects as input, and returns a large value when the two objects are not very similar.

Often we put restrictions on the dissimilarity function.

One of the most common is that it be a metric.

The dissimilarity \(d(x, y)\) between two objects \(x\) and \(y\) is a metric if

\(d(i, j) = 0 \leftrightarrow i == j\;\;\;\;\;\;\;\;\) (identity of indiscernables)

\(d(i, j) = d(j, i)\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\) (symmetry)

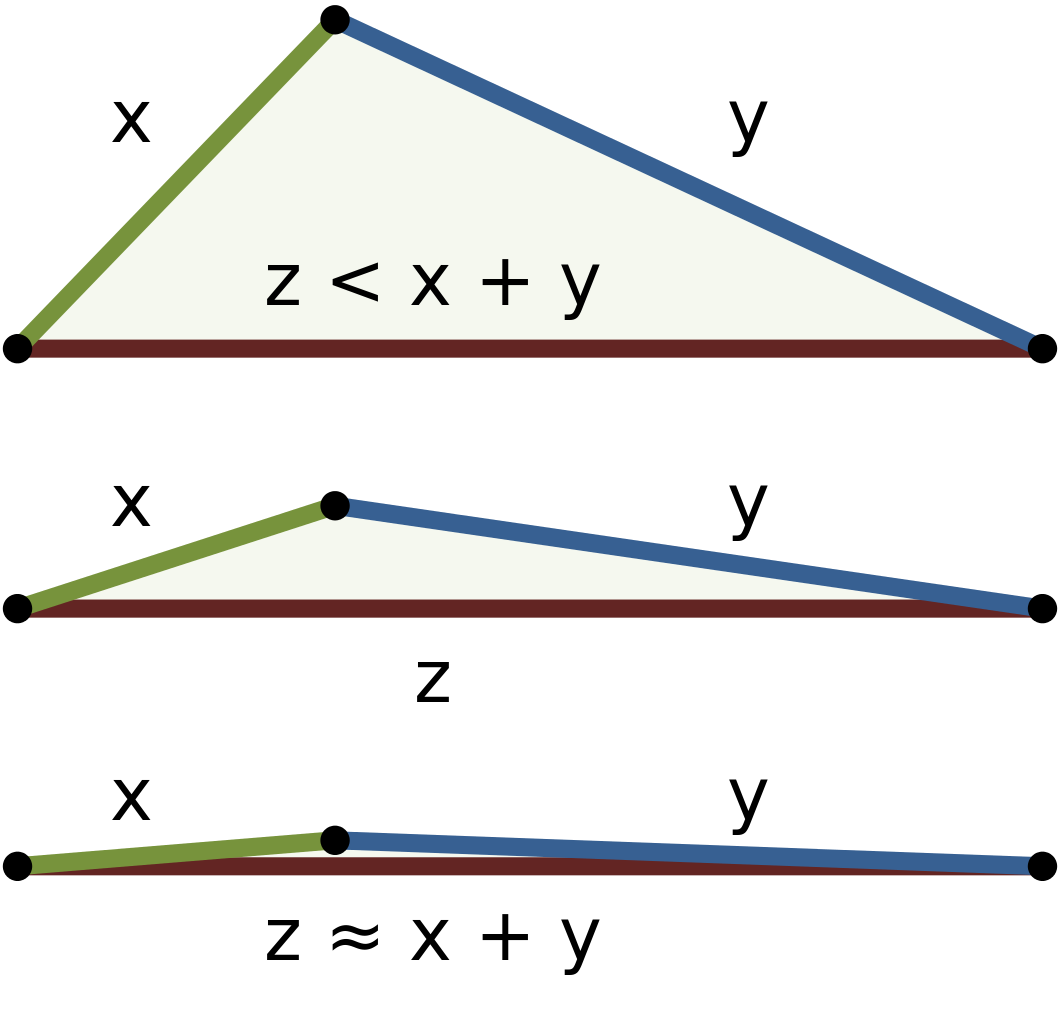

\(d(i, j) \leq d(i, h)+d(h, j)\;\;\) (triangle inequality)

By WhiteTimberwolf, Brews ohare (PNG version) - PNG version, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=26047092

A metric is also commonly called a distance.

Sometimes we will use “distance” informally, ie, to refer to a dissimilarity function even if we are not sure it is a metric.

We’ll try to say “dissimilarity” in those cases though.

Why is it important or valuable for a dissimilarity to be a metric?

The additional constraints allow us to reason about and more easily visualize the data.

The main way this happens is through the triangle inequality.

The triangle inequality basically says, if two objects are “close” to another object, then they are “close” to each other.

This is not always the case for real data, but when it is true, it can really help.

Definitions of distance or dissimilarity functions are usually diferent for real, boolean, categorical, and ordinal variables.

Weights may be associated with diferent variables based on applications and data semantics.

Matrix representation#

Very often we will manage data conveniently in matrix form.

The standard way of doing this is:

Where we typically use symbols \(m\) for number of rows (objects) and \(n\) for number of columns (features).

When we are working with distances, the matrix representation is:

Norms#

Assume some function \(p(\mathbf{v})\) which measures the “size” of the vector \(\mathbf{v}\).

\(p()\) is called a norm if:

\(p(a\mathbf{v}) = |a|\; p(\mathbf{v})\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\;\) (absolute scaling)

\(p(\mathbf{u} + \mathbf{v}) \leq p(\mathbf{u}) + p(\mathbf{v})\;\;\;\;\;\;\;\;\;\;\) (subadditivity)

\(p(\mathbf{v}) = 0 \leftrightarrow \mathbf{v}\) is the zero vector \(\;\;\;\;\)(separates points)

Norms are important for this reason, among others:

Every norm defines a corresponding metric.

That is, if \(p()\) is a norm, then \(d(\mathbf{x}, \mathbf{y}) = p(\mathbf{x}-\mathbf{y})\) is a metric.

Useful Norms#

A general class of norms are called \(\ell_p\) norms, where \(p \geq 1.\)

Notice that we use this notation for the \(p\)-norm of a vector \(\mathbf{x}\):

The corresponding distance that an \(\ell_p\) norm defines is called the Minkowski distance.

A special – but very important – case is the \(\ell_2\) norm.

We’ve already mentioned it: it is the Euclidean norm.

The distance defined by the \(\ell_2\) norm is the same as the Euclidean distance between the vectors.

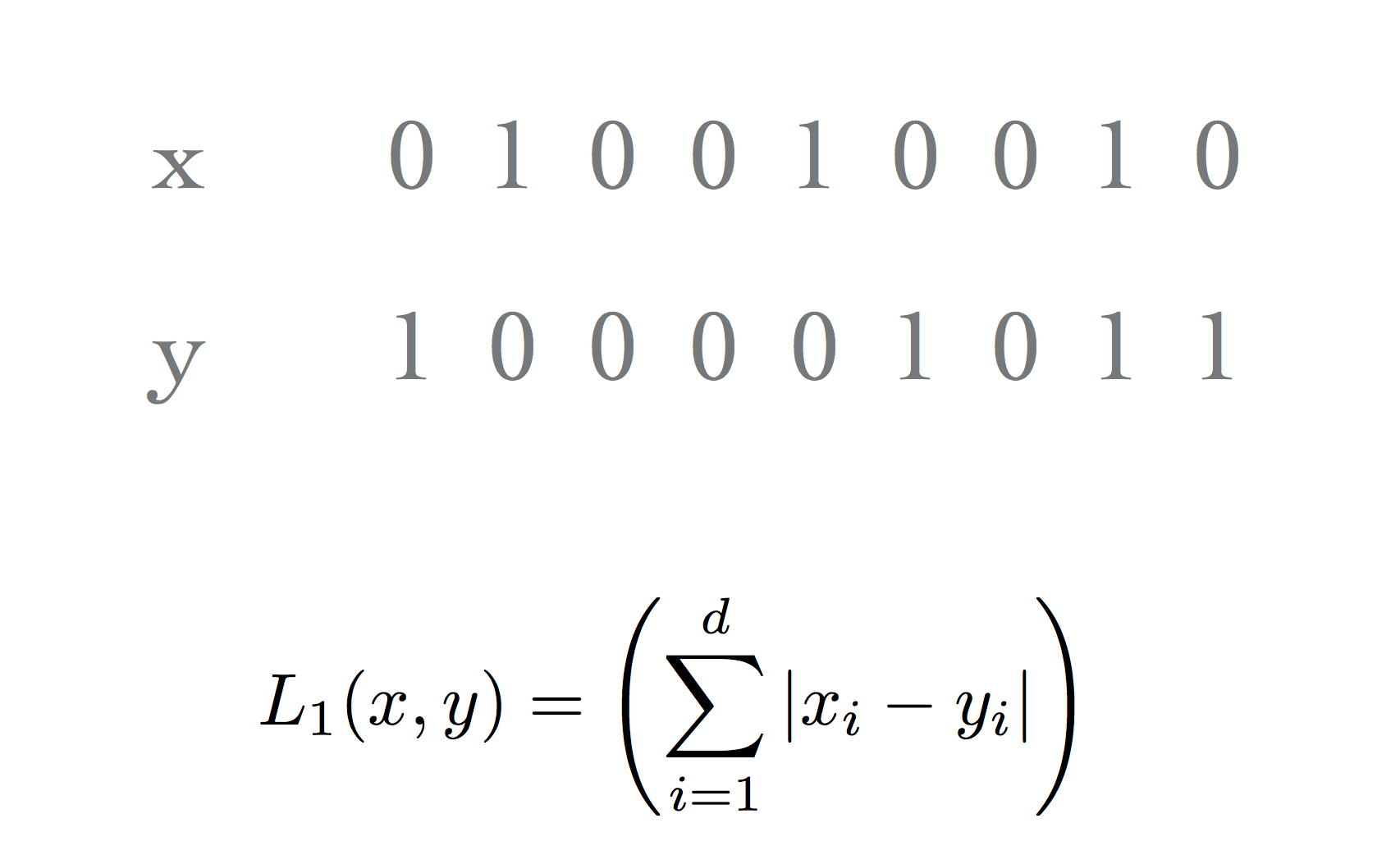

Another important special case is the \(\ell_1\) norm.

This defines the Manhattan distance, or (for binary vectors), the Hamming distance:

If we take the limit of the \(\ell_p\) norm as \(p\) gets large we get the \(\ell_\infty\) norm.

The value of the \(\ell_\infty\) norm is simply the largest element in a vector.

What is the metric that this norm induces?

Another related idea is the \(\ell_0\) “norm,” which is not a norm, but is in a sense what we get from the \(p\)-norm for \(p = 0\).

Note that this is not a norm, but it gets called that anyway.

This “norm” simply counts the number of nonzero elements in a vector.

This is called the vector’s sparsity.

Here is the notion of a “circle” under each of three norms.

That is, for each norm, the set of vectors having norm 1, or distance 1 from the origin.

CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=678101

Similarity and Dissimilarity#

From linear algebra, we learn that the inner product of two vectors can be used to compute the cosine of the angle between them:

Note that this value is large when \(\mathbf{x} \approx \mathbf{y}.\) So it is a similarity function.

We often find that we have a similarity function \(s\) and need to convert it to a dissimilarity function \(d\).

Two straightforward ways of doing that are:

or

…for some properly chosen \(k\).

For cosine similarity, one often uses:

Note however that this is not a metric!

However if we recover the actual angle beween \(\mathbf{x}\) and \(\mathbf{y}\), that is a metric.

Bit vectors and Sets#

When working with bit vectors, the \(\ell_1\) metric is commonly used and is called the Hamming distance.

This has a natural interpretation: “how well do the two vectors match?”

Or: “What is the smallest number of bit flips that will convert one vector into the other?”

In other cases, the Hamming distance is not a very appropriate metric.

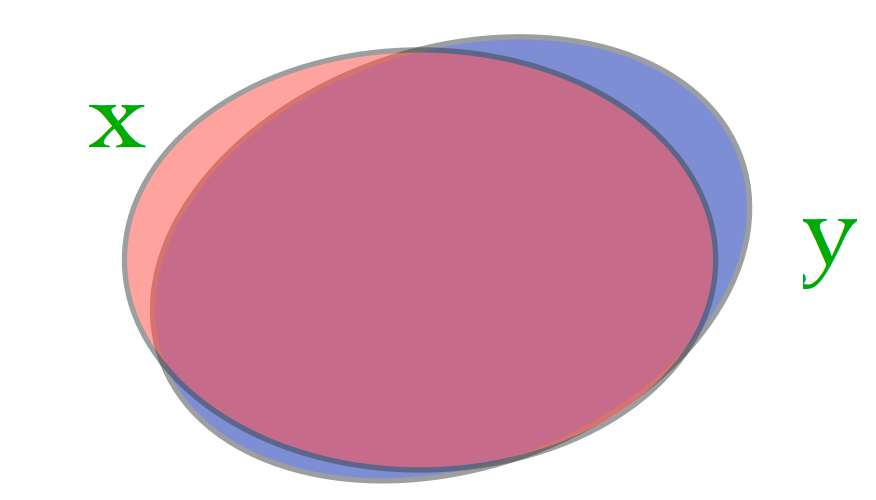

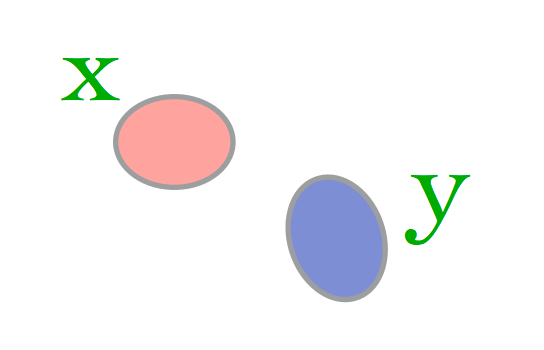

Consider the case in which the bit vector is being used to represent a set.

In that case, Hamming distance measures the size of the set difference.

For example, consider two documents. We will use bit vectors to represent the sets of words in each document.

Case 1: both documents are large, almost identical, but differ in 10 words.

Case 2: both documents are small, disjoint, have 5 words each.

The situation can be represented as this:

What matters is not just the size of the set difference, but the size of the intersection as well.

This leads to the Jaccard similarity:

This takes on values from 0 to 1, so a natural dissimilarity metric is \(1 - J_{Sim}().\)

In fact, this is a metric!:

Consider our two cases:

Case 1: (very large almost identical documents)

Here \(J_{Sim}(\mathbf{x}, \mathbf{y})\) is almost 1.

Case 2: (small disjoint documents)

Here \(J_{Sim}(\mathbf{x}, \mathbf{y})\) is 0.

Time Series#

A time series is a sequence of real numbers, representing the measurements of a real variable at (equal) time intervals.

Stock prices

Volume of sales over time

Daily temperature readings

A time series database is a large collection of time series.

Similarity of Time Series#

How should we measure the “similarity” of two timeseries?

We will assume they are the same length.

Examples:

Find companies with similar stock price movements over a time interval

Find similar DNA sequences

Find users with similar credit usage patterns

Two Problems:

Defining a meaningful similarity (or distance) function.

Finding an efficient algorithm to compute it.

Norm-based Similarity Measures#

We could just view each sequence as a vector.

Then we could use a \(p\)-norm, eg \(\ell_1, \ell_2,\) or \(\ell_p\) to measure similarity.

Advantages:

Easy to compute - linear in the length of the time series (O(n)).

It is a metric.

Disadvantage:

May not be meaningful!

We may believe that \(\mathbf{ts1}\) and \(\mathbf{ts2}\) are the most “similar” pair of timeseries.

However, according to Euclidean distance:

while

Not good!

Feature Engineering#

In general, there may be different aspects of a timeseries that are important in different settings.

The first step therefore is to ask yourself “what is important about timeseries in my application?”

This is an example of feature engineering.

In other words, feature engineering is the art of computing some derived measure from your data object that makes its important properties usable in a subsequent step.

A reasonable approach may then to be:

extract the relevant features

use a simple method (eg, a norm) to define similarity over those features.

In the case above, one might think of using

Fourier coefficients (to capture periodicity)

Histograms

Or something else!

Dynamic Time Warping#

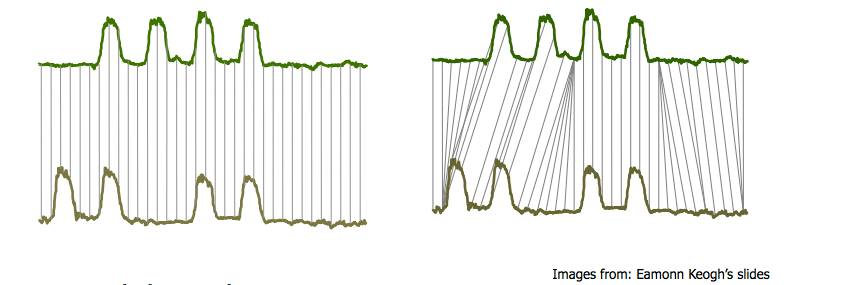

One case that arises often is something like the following: “bump hunting”

Both timeseries have the same key characteristics: four bumps.

But a one-one match (ala Euclidean distance) will not detect the similarity.

(Be sure to think about why Euclidean distance will fail here.)

A solution to this is called dynamic time warping.

The basic idea is to allow acceleration or deceleration of signals along the time dimension.

Classic applications:

Speech recognition

Handwriting recognition

Specifically:

Consider \(X = x_1, x_2, \dots, x_n\) and \(Y = y_1, y_2, \dots, y_n\).

We are allowed to extend each sequence by repeating elements to form \(X'\) and \(Y'\).

We then calculate, eg, Euclidean distance between the extended sequnces \(X'\) and \(Y'\)

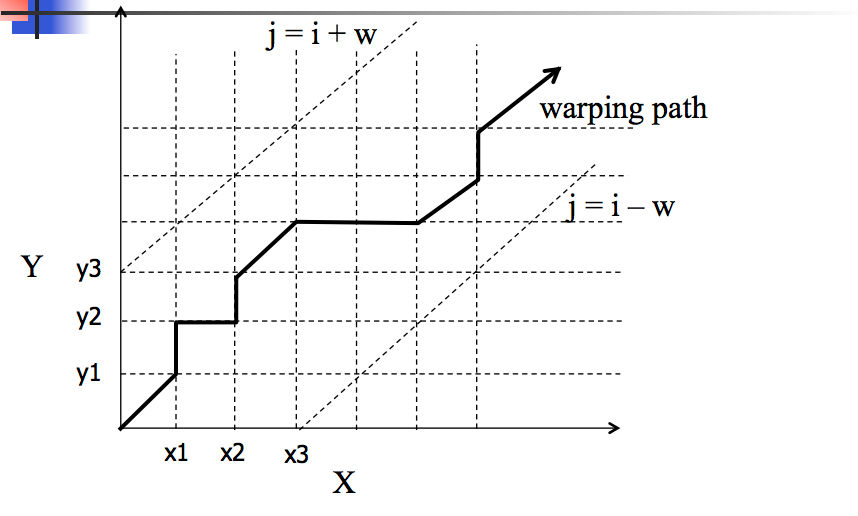

There is a simple way to visualize this algorithm.

Consider a matrix \(M\) where \(M_{ij} = |x_i - y_j|\) (or some other error measure).

\(M\) measures the amount of error we get if we match \(x_i\) with \(y_j\).

So we seek a path through \(M\) that minimizes the total error.

We need to start in the lower left and work our way up via a continuous path.

Basic restrictions on path:

Montonicity

path should not go down or to the left

Continuity

No elements may be skipped in sequence

This can be solved via dynamic programming. However, the algorithm is still quadratic in \(n\).

Hence, we may choose to put a restriction on the amount that the path can deviate from the diagonal.

The basic algorithm looks like this:

D[0, 0] = 0

for i in range(n):

for j in range(m):

D[i,j] = M[i,j] +

min( D[i-1, j], # insertion

D[i, j-1], # deletion

D[i-1, j-1] ) # match

Unfortunately, the algorithm is still quadratic in \(n\) – it is \(O(nm)\).

Hence, we may choose to put a restriction on the amount that the path can deviate from the diagonal.

This is implemented by not allowing the path to pass through locations where \(|i - j| > w\).

Then the algorithm is \(O(nw)\).

From Timeseries to Strings#

A closely related idea concerns strings.

The key point is that, like timeseries, strings are sequences.

Given two strings, one way to define a ‘distance’ between them is:

the minimum number of edit operations that are needed to transform one string into the other.

Edit operations are insertion, deletion, and substitution of single characters.

This is called edit distance or Levenshtein distance.

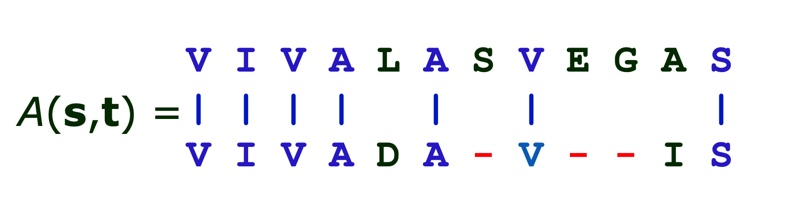

For example, given strings:

s = VIVALASVEGAS

and

t = VIVADAVIS

we would like to

compute the edit distance, and

obtain the optimal alignment

A dynamic programming algorithm can also be used to find this distance,

and it is very similar to dynamic time-warping.

In bioinformatics this algorithm is called “Smith-Waterman” sequence alignment.